Now available for digital download, a new book from Nick Hunn, Chair of the Bluetooth SIG Hearing Aid Working Group, Vice Chair of the Generic Audio Working Group, and a key contributor to the Bluetooth LE Audio specifications, provides an in-depth, technical overview of the Bluetooth LE Audio specifications, illustrates how they work together, and shows you how to use them to develop innovative new applications.

The following is an excerpt from Hunn’s book that looks at the use cases Bluetooth LE Audio will support.

2.1 The Use Cases

In the initial years of Bluetooth® LE Audio development, we saw four main waves of use cases and requirements drive its evolution. It started off with a set of use cases which came from the hearing aid industry. These were focused on topology, power consumption and latency.

2.1.1 The Hearing Aid Use Cases

The topologies for hearing aids were a major step forward from what the Bluetooth® Classic Audio profiles do, so we’ll start with them.

2.1.1.1 Basic Telephony

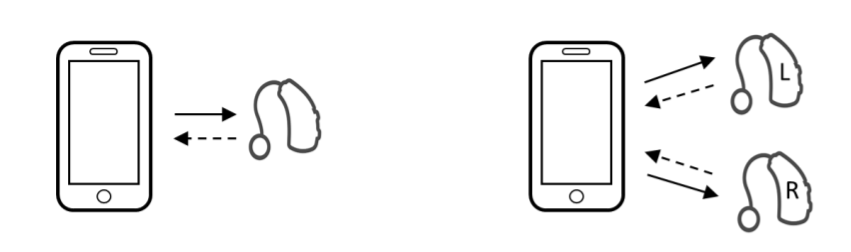

Figure 2.1 shows the two telephony use cases for hearing aids, allowing hearing aids to connect to phones. It’s an important requirement, as holding a phone next to a hearing aid in your ear often causes interference.

The simplest topology, on the left, is an audio stream from a phone to a hearing aid, which allows a return stream, aimed primarily at telephony. It can be configured to use the microphone on the hearing aid for capturing return speech, or the user can speak into their phone. That’s no different from what the Hands-Free Profile (HFP) does. But from the beginning, the hearing aid requirements had the concept that the two directions of the audio stream were independent and could be configured by the application. In other words, the stream from the phone to the hearing aid, and the return stream from hearing aid to phone would be configured and controlled separately, so that either could be turned on or off. The topology on the right of Figure 2.1 moves beyond anything that the Advanced Audio Distribution Profile (A2DP) or HFP can do. Here the phone sends a separate left and right audio stream to left and right hearing aids and then adds the complexity of optional return streams from each of the hearing aid microphones. That introduces a second step beyond anything that Bluetooth Classic Audio profiles can manage, requiring separate synchronised streams to two independent audio devices.

FEATURED DOWNLOAD

Introducing Bluetooth® LE Audio

Download your free digital copy and find out how Bluetooth LE Audio will change the way we design and use audio.

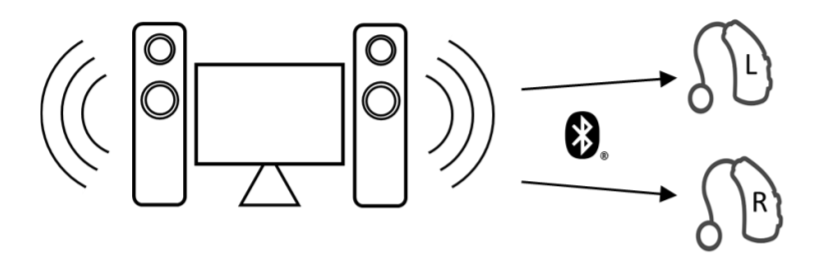

2.1.1.2 Low Latency Audio From a TV

An interesting extension of the requirement arises from the fact that hearing aids may continue to receive ambient sound as well as the Bluetooth® audio stream. Many hearing aids do not occlude the ear (occlude is the industry term for blocking the ear, like an earplug), which means that the wearer always hears a mix of ambient and amplified sound. As the processing delay within a hearing aid is minimal (less than a few milliseconds), this doesn’t present a problem. However, it becomes a problem in a situation like that of Figure 2.2, where some of the wearer’s family is listening to the sound through the TV’s speakers whilst the hearing aid user hears a mix of the ambient sound from the TV as well as the same audio stream through their Bluetooth connection.

If the delay between the two audio signals is much more than 30 – 40 milliseconds, it begins to add echo, making the sound more difficult to interpret, which is the opposite of what a hearing aid should be doing. 30 – 40 milliseconds is a much tighter latency than most existing A2DP solutions can provide, so this introduced a new requirement of very low latency.
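As a rough illustration of that budget, the sketch below compares the streamed audio's latency with the time the ambient sound takes to travel from the TV to the listener. The function names, the 343 m/s speed of sound and the example figures are assumptions made for illustration; the 30 ms threshold is simply the lower end of the range quoted above.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value for air at room temperature


def echo_gap_ms(wireless_latency_ms: float, distance_to_tv_m: float) -> float:
    """Gap between the ambient sound arriving and the streamed copy being rendered."""
    acoustic_delay_ms = distance_to_tv_m / SPEED_OF_SOUND_M_PER_S * 1000.0
    return wireless_latency_ms - acoustic_delay_ms


def is_echo_likely(wireless_latency_ms: float,
                   distance_to_tv_m: float,
                   threshold_ms: float = 30.0) -> bool:
    """True if the two copies of the sound arrive further apart than the threshold."""
    return abs(echo_gap_ms(wireless_latency_ms, distance_to_tv_m)) > threshold_ms


if __name__ == "__main__":
    # Hypothetical figures: a listener 3 m from the TV, with two candidate link latencies.
    for latency_ms in (150.0, 30.0):
        print(latency_ms, round(echo_gap_ms(latency_ms, 3.0), 1), is_echo_likely(latency_ms, 3.0))
```

At 3 m the acoustic path only accounts for about 9 ms, so almost all of the budget has to be met by the wireless link itself.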

Although the bandwidth requirements for hearing aids are relatively modest, with a bandwidth of 7kHz sufficient for mono speech and 11kHz for stereo music, these could not be easily met with the existing Bluetooth codecs whilst achieving that latency requirement. That led to a separate investigation to scope the performance requirements for a suitable codec, leading to the incorporation of the LC3 codec, which we’ll cover in Chapter 5.
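To relate those audio bandwidths to sampling rates, the sketch below applies the Nyquist criterion (sample at no less than twice the highest frequency) and picks the lowest rate that satisfies it. The list of candidate rates is an assumption based on the sampling rates commonly listed for LC3, not something stated in this excerpt, and the function name is hypothetical.

```python
# Assumed candidate sampling rates (Hz); not taken from this excerpt.
CANDIDATE_SAMPLE_RATES_HZ = [8000, 16000, 24000, 32000, 44100, 48000]


def min_sample_rate_hz(audio_bandwidth_hz: float) -> int:
    """Return the lowest candidate rate that is at least twice the audio bandwidth."""
    nyquist_rate_hz = 2 * audio_bandwidth_hz
    for rate_hz in CANDIDATE_SAMPLE_RATES_HZ:
        if rate_hz >= nyquist_rate_hz:
            return rate_hz
    raise ValueError("bandwidth too wide for the candidate sampling rates")


if __name__ == "__main__":
    print(min_sample_rate_hz(7000))   # mono speech bandwidth from the text
    print(min_sample_rate_hz(11000))  # stereo music bandwidth from the text
```

Under these assumptions, 7kHz speech fits a 16 kHz sampling rate and 11kHz music fits 24 kHz.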

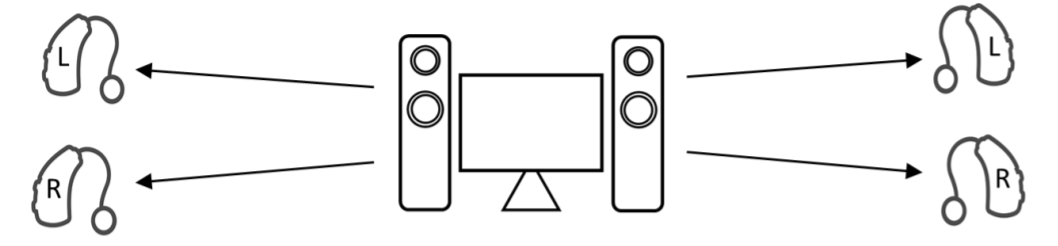

2.1.1.3 Adding More Users

Hearing loss may run in families, and is often linked with age, so it’s common for there to be more than one person in a household who wears hearing aids. Therefore, the new topology needed to support multiple hearing aid wearers. Figure 2.3 illustrates that use case for two people, both of whom should experience the same latency.

2.1.1.4 Adding More Listeners to Support Larger Areas

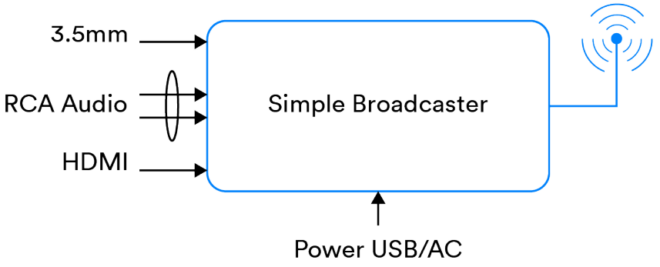

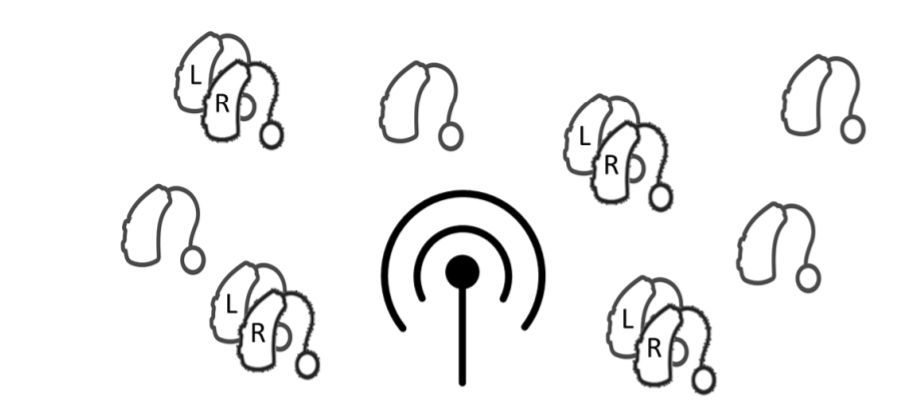

The topology should also be scalable, so that multiple people can listen, as in a classroom or care home. That requirement lies on a spectrum which extends to the provision of a broadcast replacement for the current telecoil induction loops. This required a Bluetooth® broadcast transmitter which could broadcast mono or stereo audio streams capable of being received by any number of hearing aids which are within range, as shown in Figure 2.4.

Figure 2.4 also recognises the fact that some people have hearing loss in only one ear, whereas others have hearing loss in both (often at different levels in each ear). That means that it should be possible to broadcast stereo signals at the same time as mono signals. It also highlights the fact that a user may wear hearing aids from two different companies to cope with those differences, or a hearing aid in one ear and a consumer earbud in the other.

2.1.1.5 Coordinating Left and Right Hearing Aids

Whatever the combination, it should be possible to treat a pair of hearing aids as a single set of devices, so that both connect to the same audio source and common features like volume control work on both of them in a consistent manner. This introduced the concept of coordination, where different devices which may come from different manufacturers would accept control commands at the same time and interpret them in the same way.

2.1.1.6 Help With Finding Broadcasts and Encryption

With telecoil, users have only one option to obtain a signal – turn their telecoil receiver on, which picks up audio from the induction loop that surrounds them, or turn it off. Only one telecoil signal can be present in an area, so you don’t have to choose which signal you want. On the other hand, that means you can’t do things like broadcast multiple languages at the same time.

With Bluetooth, multiple broadcast transmitters can operate in the same area. That has obvious advantages, but introduces two new problems – how do you pick up the right audio stream, and how do you prevent others from listening in to a private conversation?

To help choose the correct stream, it’s important that users can find out what each stream is, so they can jump straight to their preferred choice. The richness of that experience will obviously differ depending upon how the search for the broadcast streams is implemented, either on the hearing aid, or on a phone or remote control, but the specification needs to cover all of those possibilities. Many public broadcasts wouldn’t need to be private as they reinforce public audio announcements, but others would. In environments like the home, you wouldn’t want to pick up your neighbour’s TV. Therefore, it is important that the audio streams can be encrypted, requiring the ability to distribute encryption keys to authorised users. That process has to be secure, but easy to do.

As well as the low latency, emulating current hearing aid usage added some other constraints. Where the user wears two hearing aids, regardless of whether they are receiving the same mono, or stereo audio streams, they need to render the audio within 25µs of each other to ensure that the audio image stays stable. That’s equally true for stereo earbuds, but is challenging when the left and right devices may come from different manufacturers.
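To put the 25µs figure in context, the sketch below compares it with the duration of a single audio sample and checks a pair of rendering timestamps against the budget. The function names and the example timestamps are hypothetical.

```python
def sample_period_us(sample_rate_hz: int) -> float:
    """Duration of one audio sample in microseconds."""
    return 1_000_000 / sample_rate_hz


def within_sync_budget(left_render_us: float,
                       right_render_us: float,
                       budget_us: float = 25.0) -> bool:
    """True if the left and right devices render within the budget of each other."""
    return abs(left_render_us - right_render_us) <= budget_us


if __name__ == "__main__":
    for rate_hz in (16000, 48000):
        print(rate_hz, round(sample_period_us(rate_hz), 2))
    print(within_sync_budget(1000.0, 1020.0))  # hypothetical timestamps, 20 us apart
    print(within_sync_budget(1000.0, 1030.0))  # hypothetical timestamps, 30 us apart
```

At a 16 kHz sampling rate one sample lasts 62.5µs, so the two devices have to agree on rendering time to well within a single sample period.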

2.1.1.7 Practical Requirements

Hearing aids are very small, which means they have very limited space for buttons. They are worn by all ages, but some older wearers have limited manual dexterity, so it’s important that controls for adjusting volume and making connections can be implemented on other devices, which are easier to use. That may be the audio source, typically the user’s phone, but it’s common for hearing aid users to have small keyfob-like remote controls as well. These have the advantage that they work instantly. If you want to reduce the volume of your hearing aid, you just press the volume or mute button; you don’t need to enable the phone, find the hearing aid app and control it from there. That can take too long and is not a user experience that most hearing aid users appreciate. They need a volume and mute control method which is quick and convenient, otherwise they’ll take their hearing aid out of their ear, which is not the desired behaviour.

There is another hearing aid requirement around volume, which is that the volume level (actually the gain) should be implemented on the hearing aid. The rationale for this is that if the audio streams are transmitted at line level, you get the maximum dynamic range to work with. For a hearing aid, which is processing the sound, it is important that the incoming signal provides the best possible signal to noise ratio, particularly if it is being mixed with an audio stream from ambient microphones. If the gain of the audio is reduced at the source, it results in a lower signal to noise ratio.
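The effect of reducing gain at the source can be sketched numerically. The example below is a simplified model, not how an LE Audio link actually behaves: it assumes the only noise is the rounding error of a 16-bit sample format, and it measures the signal to noise ratio of a test tone sent at full scale and again with 20 dB of attenuation applied before quantisation. The function name, tone frequency and bit depth are all assumptions chosen for illustration.

```python
import math


def quantised_snr_db(gain_db: float,
                     bits: int = 16,
                     sample_rate_hz: int = 48000,
                     tone_hz: float = 997.0,
                     num_samples: int = 48000) -> float:
    """SNR of a sine wave after source-side gain and rounding to integer samples."""
    full_scale = 2 ** (bits - 1) - 1
    amplitude = full_scale * 10 ** (gain_db / 20)
    signal_power = 0.0
    noise_power = 0.0
    for i in range(num_samples):
        ideal = amplitude * math.sin(2 * math.pi * tone_hz * i / sample_rate_hz)
        error = round(ideal) - ideal  # rounding error added by the fixed sample format
        signal_power += ideal * ideal
        noise_power += error * error
    return 10 * math.log10(signal_power / noise_power)


if __name__ == "__main__":
    full = quantised_snr_db(0.0)
    reduced = quantised_snr_db(-20.0)
    print(round(full, 1), round(reduced, 1), round(full - reduced, 1))
```

In this model the noise floor stays where it is while the signal drops, so every decibel of gain removed at the source is a decibel of signal to noise ratio the hearing aid can no longer recover.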

An important difference between hearing aids and earbuds or headphones is that hearing aids are worn most of the time and are constantly active, amplifying and adapting the ambient sound to help the wearer hear more clearly. Users don’t regularly take them off and pop them back in a charging case. The typical time a pair of hearing aids is worn each day is around nine and a half hours, although some users may wear them for fifteen hours or more. That’s very different from earbuds and headphones, which are only worn when the user is about to make or take a phone call, or listen to audio. Earbud manufacturers have been very clever with the design of their charging cases to encourage users to regularly recharge their earbuds during the course of a day, giving the impression of a much greater battery life. Hearing aids don’t have that option, so designers need to do everything they can to minimise power consumption.

One of the things that takes up power is looking for other devices and maintaining background connections. Earbuds get clear signals about when to do this – it’s when they’re taken out of the charging box. Most also contain optical sensors to detect when they are in the ear, so they can go back to sleep if they’re on your desk. Hearing aids don’t get the same, unambiguous signal to start a Bluetooth connection as they’re always on, constantly working as hearing aids. That implies that they need to maintain ongoing Bluetooth connections with other devices while they’re waiting for something to happen. These can be low duty-cycle connections, but not too low, otherwise the hearing aid might miss an incoming call, or take too long to respond to a music streaming app being started. Because a hearing aid may connect to multiple different devices, for example a TV, a phone, or even a doorbell, connections like this would drain too much power, so there was a requirement for a new mechanism to allow them to make fast connections with a range of different products, without killing the battery life.

2.1.2 Core Requirements to Support the Hearing Aid Use Cases

Having defined the requirements for topology and connections, it became obvious that a significant number of new features needed to be added to the Core specification to support them. This led to a second round of work to determine how best to meet the hearing aid requirements in the Core.

The first part of the process was an analysis of whether the new features could be supported by extending the existing Bluetooth® audio specifications rather than introducing a new audio streaming capability into Bluetooth Low Energy. If that were possible, it would have provided backwards compatibility with current audio profiles. The conclusion, similar to an analysis that had been performed when Bluetooth LE was first developed, was that it would involve too many compromises and that it would be better to do a “clean sheet” design on top of the Core 4.1 Low Energy specification.

The proposal for the Core was to implement a new feature called Isochronous Channels which could carry audio streams in Bluetooth LE, alongside an existing ACL channel. The ACL channel would be used to configure, set up and control the streams, as well as carrying more generic control information, such as volume, telephony and media control. The Isochronous Channels could support unidirectional or bidirectional Audio Streams, and multiple Isochronous Channels could be set up with multiple devices. This separated out the audio data and control planes, which makes Bluetooth LE Audio far more flexible.

It was important that the audio connections were robust, which meant they needed to support multiple retransmissions, to cope with the fact that some transmissions might suffer from interference. For unicast streams, there is an ACK/NACK acknowledgement scheme, so that retransmissions could stop once the transmitter knew that data had been received. For broadcast, where there is no feedback, the source would need to unconditionally retransmit the audio packets. During the investigation of robustness, it became apparent that the frequency hopping scheme used to protect LE devices against interference could be improved, so that was added as another requirement.
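The difference between the two retransmission strategies can be sketched as follows. This is a simplified model with hypothetical function names: each attempt is represented by a boolean saying whether it got through, and the acknowledgement itself is assumed never to be lost.

```python
from typing import Sequence, Tuple


def unicast_transmissions(delivered: Sequence[bool],
                          max_transmissions: int) -> Tuple[int, bool]:
    """Acknowledged stream: stop as soon as one attempt is received and acknowledged.

    Returns (number of transmissions used, whether the packet was received).
    """
    for attempt in range(max_transmissions):
        if attempt < len(delivered) and delivered[attempt]:
            return attempt + 1, True
    return max_transmissions, False


def broadcast_transmissions(delivered: Sequence[bool],
                            max_transmissions: int) -> Tuple[int, bool]:
    """Broadcast stream: no feedback, so every scheduled copy is always sent."""
    received = any(delivered[:max_transmissions])
    return max_transmissions, received


if __name__ == "__main__":
    pattern = [False, True, True]  # first attempt hit by interference
    print(unicast_transmissions(pattern, 3))
    print(broadcast_transmissions(pattern, 3))
```

With the same interference pattern, the acknowledged stream stops after its second attempt, while the broadcaster spends airtime on all three.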

Broadcast required some new concepts, particularly in terms of how devices could find a broadcast without the presence of a connection. Bluetooth LE uses advertisements to let devices announce their presence. Devices wanting to make a connection scan for these advertisements, then connect to the device they discover to obtain the details of what it supports, how to connect – including information on when it’s transmitting, what its hopping sequence is and what it does. With the requirements of Bluetooth LE Audio, that requires a lot more information than can be fitted into a normal Bluetooth LE advertisement. To overcome this limitation, the Core added a new feature of Extended Advertisements (EA) and Periodic Advertising trains (PA) which allow this information to be carried in data packets on general data channels which are not normally used for advertising. To accompany this, it added new procedures for a receiving device to use this information to determine where the broadcast audio streams were located and synchronise to them.

The requirement that an external device can help find a Broadcast stream added a requirement that it could then inform the receiver of how to connect to that stream – essentially an ability for the receiver to ask for directions from a remote control and be told where to go. That’s accomplished by a Core feature called PAST – Periodic Advertising Synchronisation Transfer, which is key to making broadcast acquisition simple. PAST is a really useful feature for hearing aids, as scanning takes a lot of power. Minimising scanning helps prolong the battery life of a hearing aid.

The hearing aid requirements also resulted in a few other features being added to the Core requirements, primarily around performance and power saving. The first was an ability for the new codec to be implemented in the Host or in the Controller. The latter makes it easier for hardware implementations, which are generally more power efficient. The second was to put constraints on the maximum time a transmission or reception needed to last, which impacted the design of the packet structure within Isochronous Channels. The reason behind this is that many hearing aids use primary, zinc-air batteries, because of their high energy density. However, this battery chemistry relies on limiting current spikes and high-power current draw. Failing to observe these restrictions results in a very significant reduction of battery life. Meeting them shaped the overall design of the Isochronous Channels.

Two final additions to the Core requirements, which came in fairly late in the development, were the introduction of the Isochronous Adaptation Layer (ISOAL) and the Enhanced Attribute Protocol (EATT).

ISOAL allows devices to convert Service Data Units (SDUs) from the upper layer to differently sized Protocol Data Units (PDUs) at the Link Layer and vice versa. The reason this is needed is to cope with devices which may be using the recommended timing settings for the new LC3 codec, which is optimised for 10ms frames, alongside connections to older Bluetooth devices which run at a 7.5ms timing interval.
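The timing mismatch that motivates ISOAL can be illustrated with a small sketch. It does not model ISOAL’s actual framing or headers; it only assumes that the codec produces one SDU every 10ms, that the link offers a transmit opportunity every 7.5ms, and that an SDU is available the instant it is produced. The function name is hypothetical.

```python
from typing import List


def sdus_ready_per_interval(sdu_interval_us: int,
                            iso_interval_us: int,
                            num_intervals: int) -> List[int]:
    """Count how many new SDUs are waiting at each link transmit opportunity."""
    counts = []
    handed_over = 0
    for k in range(num_intervals):
        event_us = k * iso_interval_us
        # SDUs are produced at t = 0, T, 2T, ...; count those produced up to this event.
        produced = event_us // sdu_interval_us + 1
        counts.append(produced - handed_over)
        handed_over = produced
    return counts


if __name__ == "__main__":
    print(sdus_ready_per_interval(10_000, 7_500, 8))   # mismatched timing
    print(sdus_ready_per_interval(10_000, 10_000, 4))  # matched timing
```

With matched timing every opportunity carries exactly one SDU. With the 10ms and 7.5ms combination, one opportunity in every four has nothing new to send, which is why an adaptation layer is needed to repackage SDUs into differently sized PDUs.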

EATT is an enhancement to the standard Attribute Protocol (ATT) of Bluetooth LE to allow multiple instances of the ATT protocol to run concurrently.

The Extended Advertising features were adopted in the Core 5.0 release and PAST in Core 5.1, with Isochronous Channels, EATT and ISOAL in the more recent Core 5.2 release, paving the way for all of the other Bluetooth LE Audio specifications to be built on top of them.

2.1.3 Doing Everything That HFP and A2DP Can Do

As the consumer electronics industry began to recognise the potential of the Bluetooth® LE Audio features, which addressed many of the problems they had identified over the years, they made a pragmatic request for a third round of requirements, to ensure that Bluetooth® LE Audio would be able to do everything that A2DP and HFP could do. They made the point that nobody would want to use Bluetooth LE Audio instead of Bluetooth Classic Audio if the user experience was worse.

These requirements increased the performance requirements on the new codec and introduced a far more complex set of requirements for media and telephony control. The original hearing aid requirements included quite limited control functionality for interactions with phones, assuming that most users would directly control the more complex features on their phone or TV, not least because hearing aids have such a limited user interface. Many consumer audio products are larger, so don’t have that limitation. As a result, new telephony and media control requirements were added to allow much more sophisticated control.

2.1.4 Evolving Beyond HFP and A2DP

The fourth round of requirements was a reflection that audio and telephony applications have outpaced HFP and A2DP. Many calls today are VoIP and it’s common to have multiple different calls arriving on a single device – whether that’s a laptop, tablet or phone. Bluetooth® technology needed a better way of handling calls from multiple different bearers. Similarly, A2DP hadn’t anticipated streaming, and the search requirements that come with it, as it was written at a time when users owned local copies of music and rarely did anything more complex than selecting local files. Products now needed much more sophisticated media control. They also needed to be able to support voice commands without interrupting a music stream.

The complexity in today’s phone and conferencing apps where users handle multiple types of call, along with audio streaming, means that they make more frequent transitions between devices and applications. The inherent difference in architecture between HFP and A2DP has always made that difficult, resulting in a set of best practice rules which make up the MultiProfile specification for HFP and A2DP. The new Bluetooth LE Audio architecture was going to have to go beyond that and incorporate multi-profile support by design, with robust and interoperable transitions between devices and applications, as well as between unicast and broadcast.

As more people in the consumer space started to understand how telecoil and the broadcast features of hearing aids worked, they began to realise that broadcast might have some very interesting mass consumer applications. At the top of their list was the realisation that it could be used for sharing music. That could be friends sharing music from their phones, silent discos or “silent” background music in coffee shops and public spaces. Public broadcast installations, such as those designed to provide travel information for hearing aid wearers, would now be accessible to everyone with a Bluetooth headset. The concept of Audio Sharing, which we’ll examine in more detail in Chapter 12, was born.

Potential new use cases started to proliferate. If we could synchronise stereo channels for two earbuds, why not for surround sound? Companies were keen to make sure that it supported smart watches and wristbands, which could act as remote controls, or even be audio sources with embedded MP3 players. The low latencies were exciting for the gaming community. Microwave ovens could tell you when your dinner’s cooked (you can tell that idea came from an engineer). The number of use cases continued to grow as companies saw how it could benefit their customers, their product strategies and affect the future use of voice and music.

The number of features has meant it has taken a long time to complete the specification. What is gratifying is that most of the new use cases which have been raised in the last few years have not needed us to go back and reopen the specifications – we’ve found that they were already supported by the features that had been defined. That suggests the Bluetooth LE Audio standards have been well designed and are able to support the audio applications we have today as well as new audio applications which are yet to come.

Download the complete book for free.
