Revision: v1.0.1

Revision Date: 2022-06-21

Prepared By: Generic Audio Working Group

Abstract:

This profile defines how devices can distribute and/or consume audio using Bluetooth Low Energy (LE) wireless communications.

Revision History

| Revision Number | Date | Comments |
|---|---|---|
| v1.0 | 2021-09-14 | Adopted by the Bluetooth SIG Board of Directors. |
| v1.0.1 | 2022-06-21 | Adopted by the Bluetooth SIG Board of Directors. |

Version History

| Versions | Changes |
|---|---|
| v1.0 to v1.0.1 | Incorporated errata E17650, E17667, E17674, E17696, E17821, E17835, E17836, E17837, E17949, E18156, E18205, E18524, E18556, E18652, E18768, E18771, E18777. |

Acknowledgments

| Name | Company |
|---|---|
| Jonathan Tanner | Qualcomm Technologies International, Ltd |
| Chris Church | Qualcomm Technologies International, Ltd |
| Robin Heydon | Qualcomm Technologies International, Ltd |
| Nick Hunn | GN Hearing A/S |
| Søren Larsen | Widex |
| Markus Schnell | Fraunhofer IIS |
| Jeff Solum | Starkey |
| Masahiko Seki | Sony |
| Andrew Estrada | Sony |
| Stephan Gehring | Sonova AG |
| Michael Ungstrup | Widex A/S |
| Simon Jonghun Song | LG Electronics, Inc. |
| HJ Lee | LG Electronics, Inc. |
| Bjarne Klemmensen | Oticon A/S |
| Kanji Kerai | Widex A/S |
| Erwin Weinans | Plantronics Inc. |
| Scott Walsh | Plantronics Inc. |
| Georg Dickmann | Sonova AG |
| Peter Liu | Bose Corporation |
| Daniel Sisolak | Bose Corporation |
| Rasmus Abildgren | Bose Corporation |
| Xuemei Ouyang | Intel Corporation |
| Oren Haggai | Intel Corporation |
| Chethan Narayan Tumkur | Intel Corporation |
| Siegfried Lehmann | Apple |
| Riccardo Cavallari | Sivantos GmbH |
| Marcel Holtmann | Intel Corporation |
| Sam Geeraerts | NXP Semiconductors |
| Anil Kumar Vutukuru | MindTree Limited |
| Luiz Von Dentz | Intel Corporation |
| Himanshu Bhalla | Intel Corporation |
| Andrew Credland | Samsung Electronics Co., Ltd |
| Khaled Elsayed | Synopsys |
| Michael Rougeux | Bose Corporation |
| Tim Reilly | Bose Corporation |
| Ella Chu | Microchip |
| Charlie Lee | Microchip |
| Asbjørn Sæbø | Nordic Semiconductor ASA |
| David Hughes | Broadcom |
| Sherry Smith | Broadcom |
| Łukasz Rymanowski | Codecoup |
| Grzegorz Kołodziejczyk | Codecoup |
| Morteza Rahchamani | Arm |
| Frank Yerrace | Microsoft |
| Dong Jianli | Oppo |
| Yao Wang | Barrot |
| Erik Peterson | Microsoft |

Use of this specification is your acknowledgement that you agree to and will comply with the following notices and disclaimers. You are advised to seek appropriate legal, engineering, and other professional advice regarding the use, interpretation, and effect of this specification.

Use of Bluetooth specifications by members of Bluetooth SIG is governed by the membership and other related agreements between Bluetooth SIG and its members, including those agreements posted on Bluetooth SIG’s website located at www.bluetooth.com. Any use of this specification by a member that is not in compliance with the applicable membership and other related agreements is prohibited and, among other things, may result in (i) termination of the applicable agreements and (ii) liability for infringement of the intellectual property rights of Bluetooth SIG and its members. This specification may provide options, because, for example, some products do not implement every portion of the specification. All content within the specification, including notes, appendices, figures, tables, message sequence charts, examples, sample data, and each option identified is intended to be within the bounds of the Scope as defined in the Bluetooth Patent/Copyright License Agreement (“PCLA”). Also, the identification of options for implementing a portion of the specification is intended to provide design flexibility without establishing, for purposes of the PCLA, that any of these options is a “technically reasonable non-infringing alternative.”

Use of this specification by anyone who is not a member of Bluetooth SIG is prohibited and is an infringement of the intellectual property rights of Bluetooth SIG and its members. The furnishing of this specification does not grant any license to any intellectual property of Bluetooth SIG or its members. THIS SPECIFICATION IS PROVIDED “AS IS” AND BLUETOOTH SIG, ITS MEMBERS AND THEIR AFFILIATES MAKE NO REPRESENTATIONS OR WARRANTIES AND DISCLAIM ALL WARRANTIES, EXPRESS OR IMPLIED, INCLUDING ANY WARRANTIES OF MERCHANTABILITY, TITLE, NON-INFRINGEMENT, FITNESS FOR ANY PARTICULAR PURPOSE, OR THAT THE CONTENT OF THIS SPECIFICATION IS FREE OF ERRORS. For the avoidance of doubt, Bluetooth SIG has not made any search or investigation as to third parties that may claim rights in or to any specifications or any intellectual property that may be required to implement any specifications and it disclaims any obligation or duty to do so.

TO THE MAXIMUM EXTENT PERMITTED BY APPLICABLE LAW, BLUETOOTH SIG, ITS MEMBERS AND THEIR AFFILIATES DISCLAIM ALL LIABILITY ARISING OUT OF OR RELATING TO USE OF THIS SPECIFICATION AND ANY INFORMATION CONTAINED IN THIS SPECIFICATION, INCLUDING LOST REVENUE, PROFITS, DATA OR PROGRAMS, OR BUSINESS INTERRUPTION, OR FOR SPECIAL, INDIRECT, CONSEQUENTIAL, INCIDENTAL OR PUNITIVE DAMAGES, HOWEVER CAUSED AND REGARDLESS OF THE THEORY OF LIABILITY, AND EVEN IF BLUETOOTH SIG, ITS MEMBERS OR THEIR AFFILIATES HAVE BEEN ADVISED OF THE POSSIBILITY OF THE DAMAGES.

Products equipped with Bluetooth wireless technology ("Bluetooth Products") and their combination, operation, use, implementation, and distribution may be subject to regulatory controls under the laws and regulations of numerous countries that regulate products that use wireless non-licensed spectrum. Examples include airline regulations, telecommunications regulations, technology transfer controls, and health and safety regulations. You are solely responsible for complying with all applicable laws and regulations and for obtaining any and all required authorizations, permits, or licenses in connection with your use of this specification and development, manufacture, and distribution of Bluetooth Products. Nothing in this specification provides any information or assistance in connection with complying with applicable laws or regulations or obtaining required authorizations, permits, or licenses.

Bluetooth SIG is not required to adopt any specification or portion thereof. If this specification is not the final version adopted by Bluetooth SIG’s Board of Directors, it may not be adopted. Any specification adopted by Bluetooth SIG’s Board of Directors may be withdrawn, replaced, or modified at any time. Bluetooth SIG reserves the right to change or alter final specifications in accordance with its membership and operating agreements.

Copyright © 2017–2022. All copyrights in the Bluetooth Specifications themselves are owned by Apple Inc., Ericsson AB, Intel Corporation, Lenovo (Singapore) Pte. Ltd., Microsoft Corporation, Nokia Corporation, and Toshiba Corporation. The Bluetooth word mark and logos are owned by Bluetooth SIG, Inc. Other third-party brands and names are the property of their respective owners.

1. Introduction

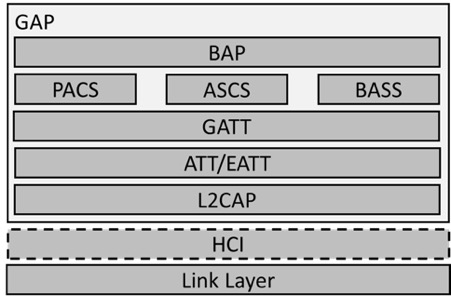

The Basic Audio Profile (BAP) defines procedures for Audio Stream control by using the Generic Attribute Profile (GATT) and the Generic Access Profile (GAP) for devices that want to use Bluetooth Low Energy (LE) in audio-related scenarios (e.g., sending or receiving unicast audio, or sending or receiving broadcast audio).

1.1. Profile dependencies

This profile requires GATT [1], GAP [1], Audio Stream Control Service (ASCS) [4], Published Audio Capabilities Service (PACS) [5], Broadcast Audio Scan Service (BASS) [6], and Link Layer (LL) [1].

1.2. Conformance

If conformance to this specification is claimed, all capabilities indicated as mandatory for this specification shall be supported in the specified manner (process-mandatory). This also applies for all optional and conditional capabilities for which support is indicated.

1.3. Bluetooth Core Specification release compatibility

This specification is compatible with the Bluetooth Core Specification, Version 5.2 [1] or later.

1.4. Language

1.4.1. Language conventions

The Bluetooth SIG has established the following conventions for use of the words shall, must, will, should, may, can, is, and note in the development of specifications:

| Term | Meaning |
|---|---|
| shall | is required to – used to define requirements. |
| must | is used to express a natural consequence of a previously stated mandatory requirement, OR an indisputable statement of fact (one that is always true regardless of the circumstances). |
| will | it is true that – only used in statements of fact. |
| should | is recommended that – used to indicate that among several possibilities one is recommended as particularly suitable, but not required. |
| may | is permitted to – used to allow options. |
| can | is able to – used to relate statements in a causal manner. |
| is | is defined as – used to further explain elements that are previously required or allowed. |
| note | Used to indicate text that is included for informational purposes only and is not required in order to implement the specification. Each note is clearly designated as a “Note” and set off in a separate paragraph. |

For clarity of the definition of those terms, see Core Specification Volume 1, Part E, Section 1.

1.4.2. Reserved for Future Use

Where a field in a packet, Protocol Data Unit (PDU), or other data structure is described as “Reserved for Future Use” (irrespective of whether in uppercase or lowercase), the device creating the structure shall set its value to zero unless otherwise specified. Any device receiving or interpreting the structure shall ignore that field; in particular, it shall not reject the structure because of the value of the field.

Where a field, parameter, or other variable object can take a range of values, and some values are described as “Reserved for Future Use,” a device sending the object shall not set the object to those values. A device receiving an object with such a value should reject it, and any data structure containing it, as being erroneous; however, this does not apply in a context where the object is described as being ignored or it is specified to ignore unrecognized values.

When a field value is a bit field, unassigned bits can be marked as Reserved for Future Use and shall be set to 0. Implementations that receive a message that contains a Reserved for Future Use bit that is set to 1 shall process the message as if that bit was set to 0, except where specified otherwise.

The acronym RFU is equivalent to Reserved for Future Use.
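The bit-field rule above can be illustrated with a minimal sketch. The mask `ASSIGNED_BITS_MASK` and both function names are hypothetical; an actual field defines its own assigned bits, and this sketch ignores any "except where specified otherwise" cases.

```python
# Hypothetical 8-bit field in which only bits 0..3 are assigned;
# bits 4..7 are Reserved for Future Use (RFU).
ASSIGNED_BITS_MASK = 0b0000_1111


def build_bitfield(flags: int) -> int:
    """Sender side: RFU bits are always transmitted as 0."""
    return flags & ASSIGNED_BITS_MASK


def interpret_bitfield(received: int) -> int:
    """Receiver side: process the field as if every RFU bit were 0.

    The structure is not rejected because an RFU bit is set to 1.
    """
    return received & ASSIGNED_BITS_MASK
```

A receiver built this way keeps working when a later revision assigns a meaning to one of the reserved bits.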

1.4.3. Prohibited

When a field value is an enumeration, unassigned values can be marked as “Prohibited.” These values shall never be used by an implementation, and any message received that includes a Prohibited value shall be ignored and shall not be processed and shall not be responded to.

Where a field, parameter, or other variable object can take a range of values, and some values are described as “Prohibited,” devices shall not set the object to any of those Prohibited values. A device receiving an object with such a value should reject it, and any data structure containing it, as being erroneous.

“Prohibited” is never abbreviated.

1.5. General interpretation rules

The following rules apply throughout this specification unless they are explicitly overridden.

1.5.1. Binary and hexadecimal numbers

Binary numbers are written with a “0b” prefix, so 0b1101 is the same as the decimal number 13.

Hexadecimal numbers are written with a “0x” prefix, so 0x42 is the same as the decimal number 66. The letters “a” to “f” are used to represent the digits 10 to 15, so 0x1A is the same as the decimal number 26. The use of capital letters or lowercase letters in a hexadecimal number is not significant.

Underscore characters placed between the digits of binary or hexadecimal numbers are intended to make the numbers easier to interpret; these underscores do not affect the value. For example, 0b0010_1011 and 0b00101011 both equal the decimal number 43.

Any number not written in one of the above ways is a decimal.
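As an illustration of the notation rules above, the following sketch parses a number written in this style. The helper name `parse_spec_number` is hypothetical and not part of this specification.

```python
def parse_spec_number(text: str) -> int:
    """Parse a number written with the conventions of this section.

    Underscores are separators only, a "0b" prefix marks binary, a "0x"
    prefix marks hexadecimal (letter case is not significant), and
    anything else is decimal.
    """
    digits = text.replace("_", "")
    lowered = digits.lower()
    if lowered.startswith("0b"):
        return int(digits[2:], 2)
    if lowered.startswith("0x"):
        return int(digits[2:], 16)
    return int(digits, 10)
```

The examples in the text map directly onto calls such as `parse_spec_number("0b0010_1011")` and `parse_spec_number("0x1A")`.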

1.5.1.1. Specification of bit values

Some values in the specification are divided into individual bits, each of which has a description. If explicit bit values are not given in a description of individual bits, that description represents the meaning of the bit when the bit is set to a value of 0b1, and the opposite meaning applies when the bit is set to a value of 0b0.

For example, a description of:

Bit 3: 32,000 Hz supported

means the same as:

Bit 3 = 0b1: 32,000 Hz supported

Bit 3 = 0b0: 32,000 Hz not supported
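A minimal sketch of how the example above might be evaluated in code. The constant and function names are hypothetical; only the bit position (bit 3) comes from the example.

```python
BIT_32000_HZ_SUPPORTED = 3  # bit position taken from the example above


def is_32000_hz_supported(bitfield: int) -> bool:
    """Return True when bit 3 is 0b1, and False when it is 0b0."""
    return (bitfield >> BIT_32000_HZ_SUPPORTED) & 0b1 == 0b1
```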

1.5.2. Arrayed parameters

Arrayed parameters are specified by using the following notation: ParameterA[i].

If more than one set of arrayed parameters is specified (e.g., ParameterA[i], ParameterB[i]), then, unless noted otherwise, the order of the parameters shall be: ParameterA[0], ParameterB[0], ParameterA[1], ParameterB[1], ParameterA[2], ParameterB[2], …ParameterA[n], ParameterB[n].
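The default ordering of arrayed parameters can be sketched as follows. The function name `interleave_arrayed` is hypothetical, and the sketch assumes that every arrayed parameter has the same number of entries.

```python
from typing import List, Sequence


def interleave_arrayed(*parameters: Sequence) -> List:
    """Order arrayed parameters as A[0], B[0], A[1], B[1], ..., A[n], B[n]."""
    if len({len(p) for p in parameters}) > 1:
        raise ValueError("all arrayed parameters must have the same length")
    # zip() groups the entries that share an index; flattening the groups
    # yields the interleaved order described above.
    return [value for group in zip(*parameters) for value in group]
```

For example, `interleave_arrayed(["A0", "A1"], ["B0", "B1"])` yields the entries in the order A0, B0, A1, B1.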

1.6. Terminology

Table 1.1 defines terms that are needed to understand features used in this profile. This profile also uses terms that are defined in the Bluetooth Core Specification [1], ASCS [4], PACS [5], and BASS [6].

|

Term |

Definition |

|---|---|

|

Additional Controller Advertising Data (ACAD) |

Defined in Volume 6, Part B, Section 2.3.4.8 in the Bluetooth Core Specification [1] |

|

Audio Channel |

A flow of audio data, which might be encoded or not, that can be assigned to a single Audio Location |

|

Audio Location |

The intended logical spatial rendering location of an Audio Channel within a spatial arrangement of loudspeakers or other audio transducers that render audio Audio Location values are defined in Bluetooth Assigned Numbers [2]. |

|

Audio Sink |

Receives unicast audio data from Audio Sources |

|

Audio Source |

Transmits unicast audio data to Audio Sinks |

|

Audio Stream Endpoint (ASE) |

Defined in ASCS [4] |

|

ASE identifier (ASE_ID) |

Defined in [4] |

|

Broadcast Audio Source Endpoint (BASE) |

Part of Basic Audio Announcement defined in Section 3.7.2.2. Contained in the additional service data portion of the Service Data advertising data (AD) data type and transported using AUX_SYNC_IND PDUs. Used to inform scanning devices of codec and other parameters of a Broadcast Isochronous Group (BIG) transporting one or more broadcast Audio Streams. |

|

Broadcast Audio Stream |

A unidirectional, connectionless, logical communication channel that transports broadcast audio data flowing from a Broadcast Source |

|

Broadcast_ID |

Data that can be used by scanning devices to help find broadcast Audio Streams. Contained in the AdvData field of AUX_ADV_IND PDUs transmitted by Broadcast Sources |

|

Broadcast Isochronous Group (BIG) |

Defined in Volume 6, Part B, Section 4.4.6.2 in [1] |

|

Broadcast Isochronous Stream (BIS) |

Defined in Volume 6, Part B, Section 4.4.6.1 in [1] |

|

Broadcast Sink |

Receives broadcast audio data from Broadcast Sources |

|

Broadcast Source |

Transmits broadcast audio data to Broadcast Sinks |

|

CIG Identifier (CIG_ID) |

Defined in Volume 6, Part B, Section 4.5.14 in [1] |

|

CIG_Sync_Delay |

Defined in Volume 6, Part B, Section 4.5.4.1.1 in [1] |

|

CIS Identifier (CIS_ID) |

Defined in Volume 6, Part B, Section 4.5.13.1 in [1] |

|

Connected Isochronous Group (CIG) |

Defined in Volume 6, Part B, Section 4.5.14 in [1] |

|

Connected Isochronous Stream (CIS) |

Defined in Volume 6, Part B, Section 4.5.13 in [1] |

|

Context Type |

Defined in PACS [5] |

|

Cross-Transport Key Derivation (CTKD) |

Defined in Volume 3, Part C, Section 14.1 in [1] |

|

Extended advertising (EA) PDUs |

Defined in Volume 6, Part B, Section 2.3.1 in [1] |

|

Enhanced ATT (EATT) bearer |

An ATT bearer defined in Volume 3, Part F, Section 3.2.11 in [1] that uses the Enhanced Credit Based Flow Control L2CAP channel mode defined in Volume 3, Part A, Section 10.2 in [1] |

|

Generic Access Profile (GAP) |

Defined in Volume 3, Part C in [1] |

|

Low Energy asynchronous connection (LE ACL) |

Defined in Volume 1, Part A, Section 3.5.4.6 in [1] |

|

Link Layer (LL) |

Defined in Volume 6, Part B in [1] |

|

LTV structure |

Length-type value formatted data A single-octet Length field includes the length of the Type and Value fields. The Length field is followed by a single-octet Type field. The Type field is followed by a Value field of length (Length-1) octets. |

|

Metadata |

Data that describes other data. Metadata parameters in this profile and related service specifications consist of LTV structures that provide contextual or other supplementary information. |

|

Packet Loss Concealment (PLC) |

A technique used to mask the effects of lost or discarded packets |

|

periodic advertising train (PA) |

Defined in Volume 6, Part B, Section 4.4.5.1 in [1] |

|

Periodic Advertising Synchronization Transfer (PAST) procedure |

Defined in Volume 3, Part C, Section 9.5.4 in [1] |

|

Presentation Delay |

Timing data intended to assist in the simultaneous rendering of audio data for one or more transducers |

|

Published Audio Capability (PAC) record |

Defined in [5] |

|

Quality of Service (QoS) |

Defined in [4] |

|

Remote Broadcast Scanning |

Scanning, on behalf of a Scan Delegator, for EA that point to a PA |

|

Scan Offloading |

The process of transferring SyncInfo and other data to a Scan Delegator that enables synchronization to a PA |

|

Service Data data type |

Defined in the Bluetooth Core Specification Supplement (CSS) [3] |

|

Service UUID data type |

Defined in the Bluetooth Core Specification Supplement (CSS) [3] |

|

SyncInfo |

Defined in Volume 6, Part B, Section 2.3.4.6 in [1] |

|

Unenhanced ATT bearer |

An ATT bearer defined in Volume 3, Part F, Section 3.2.11 in [1] that does not use the Enhanced Credit Based Flow Control L2CAP channel mode defined in Volume 3, Part A, Section 10.2 in [1] |

|

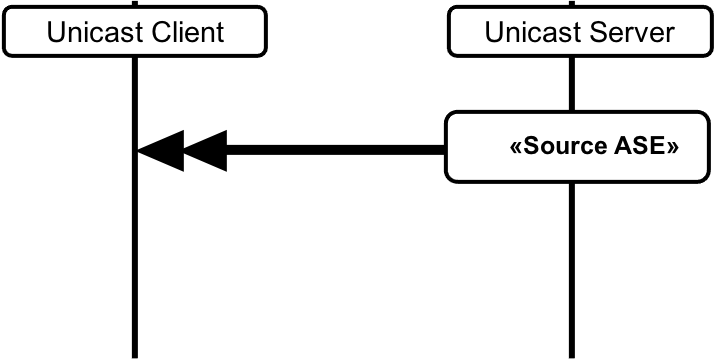

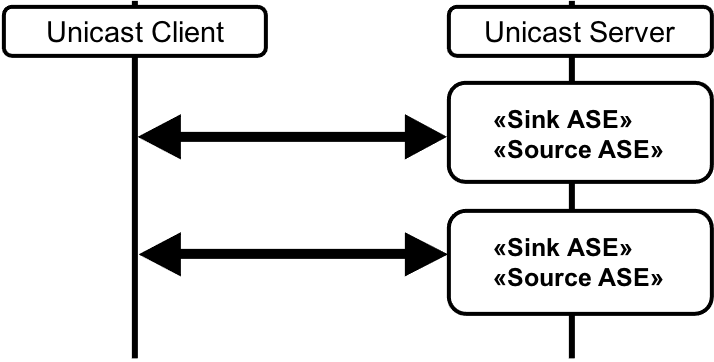

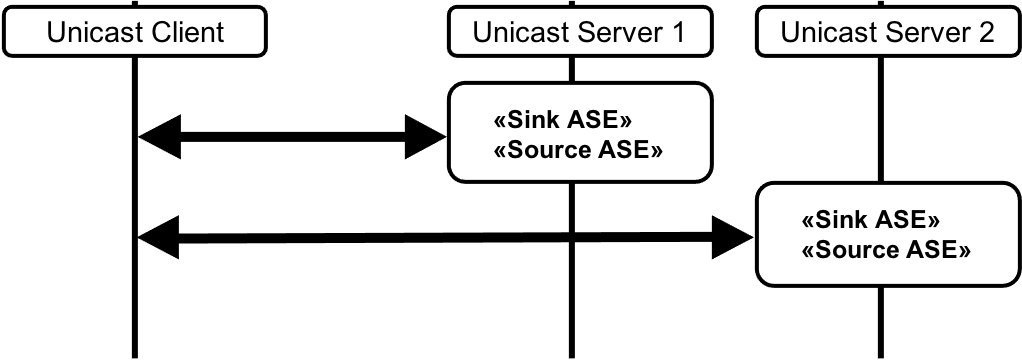

Unicast Audio Stream |

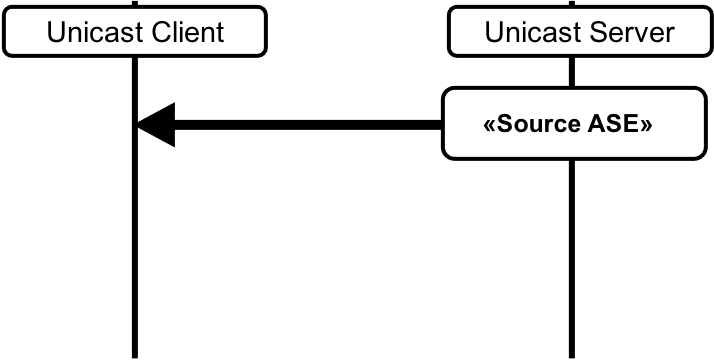

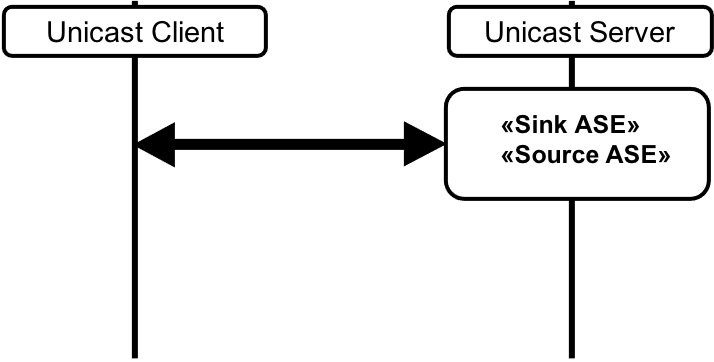

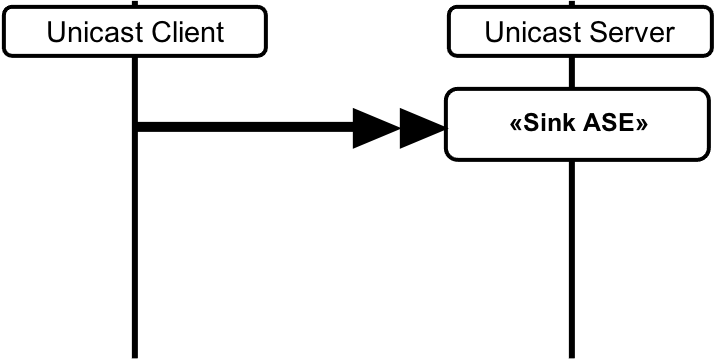

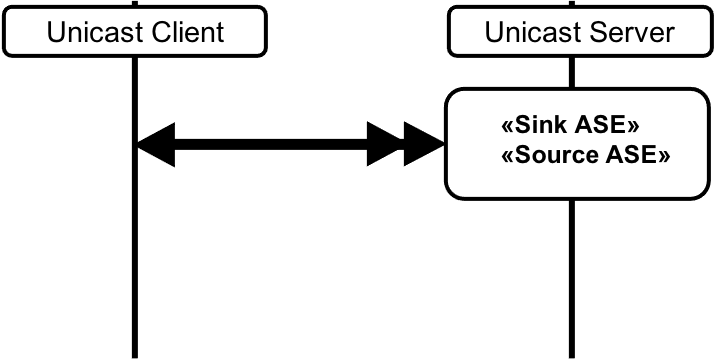

A unidirectional, logical communication channel transporting audio data for one or more Audio Channels from an Audio Source to an Audio Sink For a unicast Audio stream, audio data will either flow towards a Sink ASE exposed on the Unicast Server (server is Audio Sink) or from a Source ASE exposed on the Unicast Server (server is Audio Source). |
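The LTV structure defined in Table 1.1 can be illustrated with a minimal parsing sketch. The function name `parse_ltv` is hypothetical, as is the choice to raise an error for a zero Length or a truncated buffer. The Type values used with it are arbitrary and are not taken from any assigned-numbers list.

```python
from typing import List, Tuple


def parse_ltv(data: bytes) -> List[Tuple[int, bytes]]:
    """Split a buffer of concatenated LTV structures into (Type, Value) pairs.

    Each structure is a one-octet Length, a one-octet Type, and a Value of
    (Length - 1) octets; Length counts the Type and Value fields only.
    """
    structures = []
    offset = 0
    while offset < len(data):
        length = data[offset]
        if length == 0:
            # Assumption of this sketch: Length must at least cover the Type.
            raise ValueError("Length field of 0 leaves no room for Type")
        end = offset + 1 + length
        if end > len(data):
            raise ValueError("LTV structure is truncated")
        ltv_type = data[offset + 1]
        value = data[offset + 2:end]
        structures.append((ltv_type, value))
        offset = end
    return structures
```

For instance, the seven octets `02 01 03 03 02 AA BB` split into a structure of Type 0x01 with a one-octet Value and a structure of Type 0x02 with a two-octet Value.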

2. Configuration

2.1. Profile and protocol stack

Figure 2.1 shows the profile and protocol stack for BAP.

2.2. Profile roles

This profile defines six roles: Unicast Server, Unicast Client, Broadcast Source, Broadcast Sink, Broadcast Assistant, and Scan Delegator.

2.2.1. Unicast roles

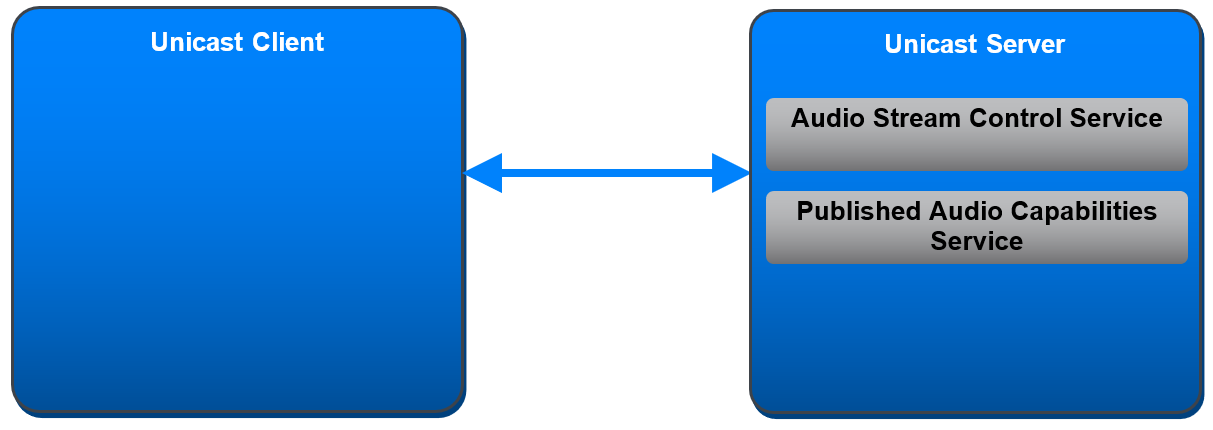

Two BAP roles are used for unicast audio: Unicast Server and Unicast Client.

2.2.1.1. Unicast Server

The Unicast Server transmits advertisements that the Unicast Client uses to discover the Unicast Server and to establish connections to the Unicast Server.

The Unicast Server exposes attributes that the Unicast Client uses to discover the Unicast Server’s supported audio capabilities.

The Unicast Server exposes attributes that the Unicast Client uses to discover, configure, and control ASEs exposed by the Unicast Server.

The Unicast Server exposes its current availability to transmit or receive unicast Audio Streams.

The Unicast Server accepts the establishment of CIGs, which may have one or more CISes, used to transport unicast Audio Streams.

The Unicast Server can terminate CISes.

2.2.1.2. Unicast Client

The Unicast Client scans for advertisements to discover Unicast Servers and establishes connections to Unicast Servers.

The Unicast Client discovers the availability of the Unicast Server to transmit or receive unicast Audio Streams.

The Unicast Client discovers and uses attributes exposed by the Unicast Server to determine the Unicast Server’s audio capabilities and audio role support.

The Unicast Client discovers attributes used to configure and control ASEs exposed by the Unicast Server.

The Unicast Client configures and establishes one or more CIGs, which can have one or more CISes used to transport a unicast Audio Stream.

The Unicast Client can terminate CISes.

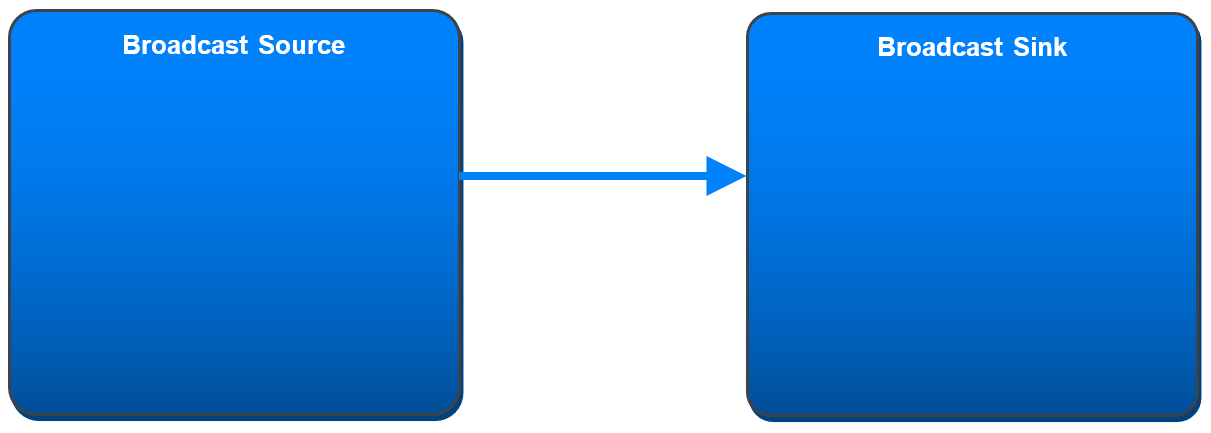

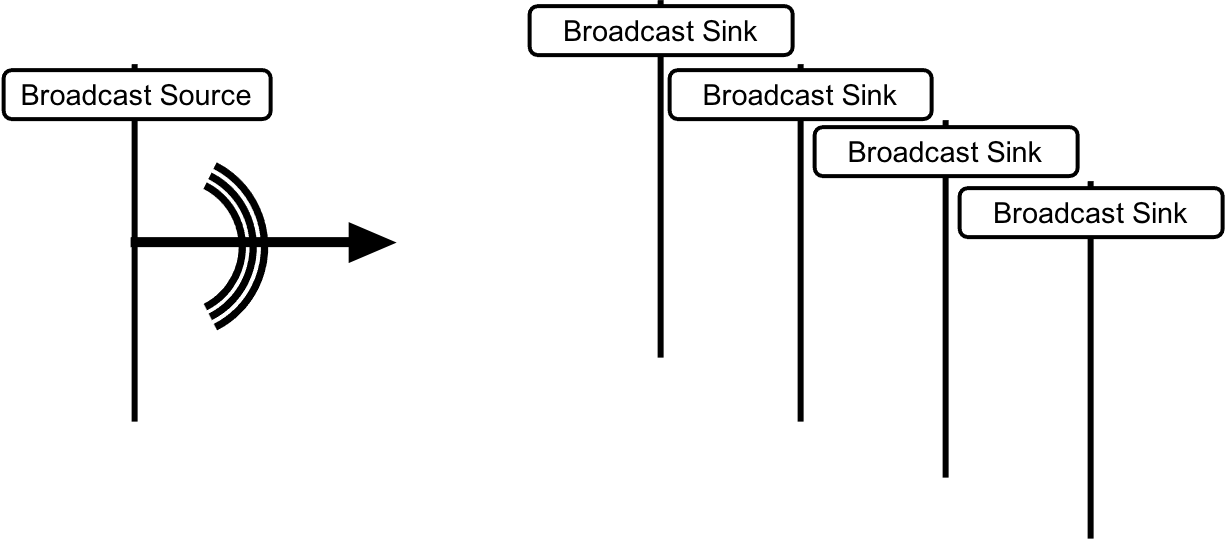

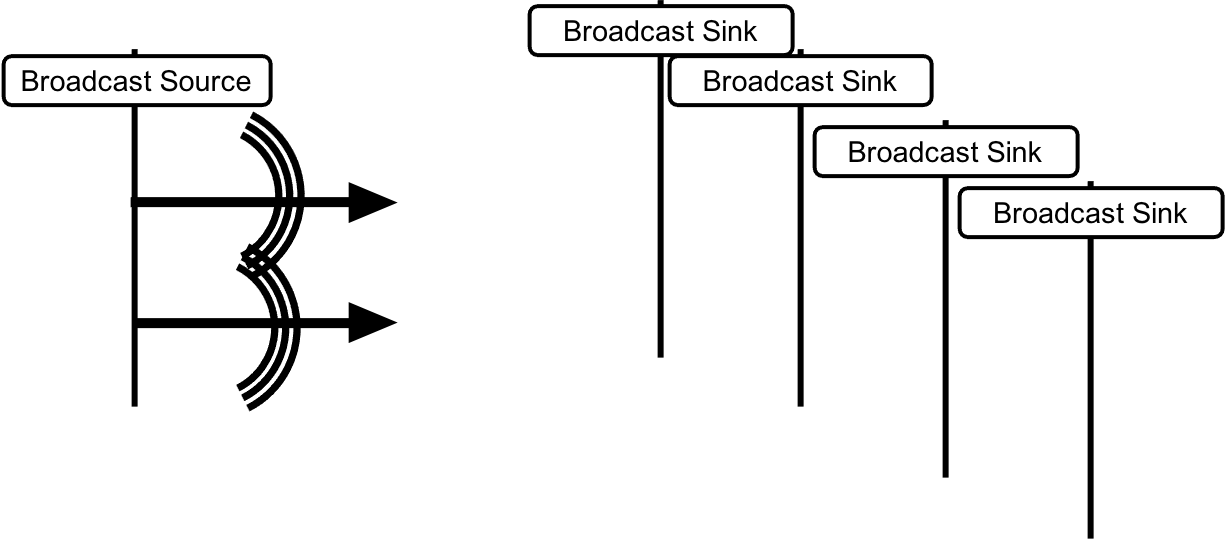

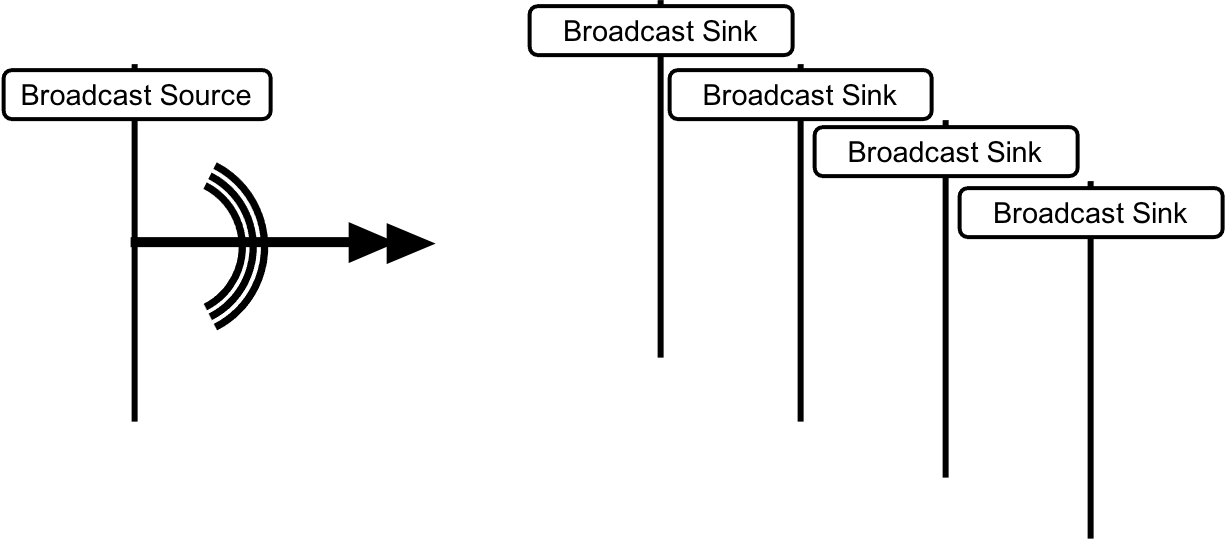

2.2.2. Broadcast roles

Four BAP roles are used for broadcast audio: Broadcast Source, Broadcast Sink, Broadcast Assistant, and Scan Delegator.

2.2.2.1. Broadcast Source

The Broadcast Source configures and establishes one or more BIGs, each containing one or more BISes that are used to transport broadcast Audio Streams.

The Broadcast Source transmits data that describes broadcast Audio Stream configurations.

The Broadcast Source transmits data that enables devices to discover and receive broadcast Audio Streams.

2.2.2.2. Broadcast Sink

The Broadcast Sink discovers data that describes broadcast Audio Stream configurations.

The Broadcast Sink discovers and receives broadcast Audio Streams.

The Broadcast Sink exposes its audio capabilities.

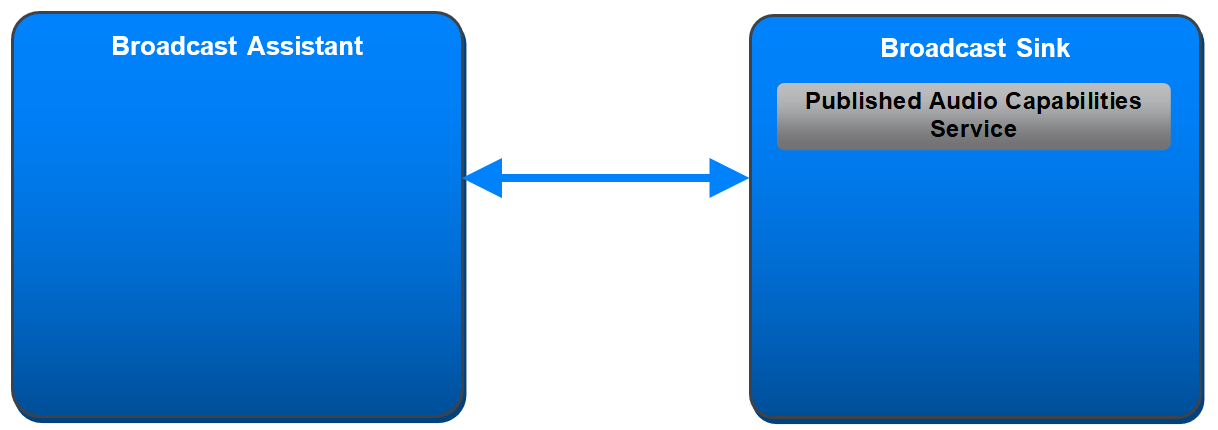

2.2.2.3. Broadcast Assistant

The Broadcast Assistant discovers the audio capabilities of Broadcast Sinks.

The Broadcast Assistant discovers data that enables devices to discover and receive broadcast Audio Streams.

The Broadcast Assistant discovers data that describes broadcast Audio Stream configurations.

The Broadcast Assistant connects to Scan Delegators and transfers data to Scan Delegators that the Broadcast Assistant has scanned on behalf of the Scan Delegators, including Broadcast_Codes necessary to decrypt encrypted broadcast Audio Streams.

The Broadcast Assistant scans for soliciting Scan Delegators.

The Broadcast Assistant requests Scan Delegators to discover data that describes broadcast Audio Streams and can request Scan Delegators collocated with Broadcast Sinks to receive broadcast Audio Streams.

2.2.2.4. Scan Delegator

The Scan Delegator solicits for Broadcast Assistant devices to perform scanning on behalf of the Scan Delegator.

The Scan Delegator receives transfers of the data that Broadcast Assistants have scanned on behalf of the Scan Delegator, including Broadcast_Codes necessary to decrypt encrypted broadcast Audio Streams.

2.3. Profile role and service relationships

The following profile role and service relationships apply:

- The Unicast Server shall be a GATT Server.
- The Unicast Client shall be a GATT Client.
- The Broadcast Source has no GATT role requirement.
- The Broadcast Sink shall be a GATT Server.
- The Broadcast Assistant shall be a GATT Client.
- The Scan Delegator shall be a GATT Server.

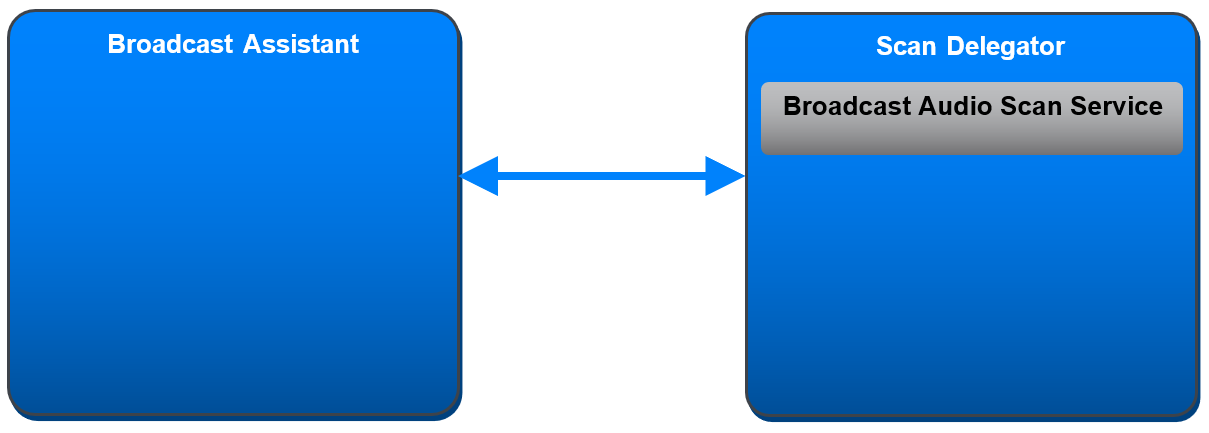

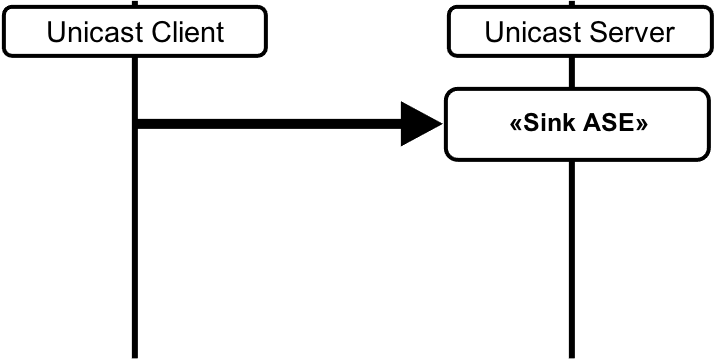

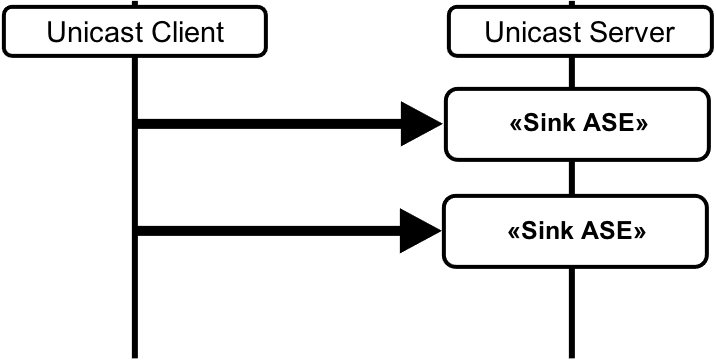

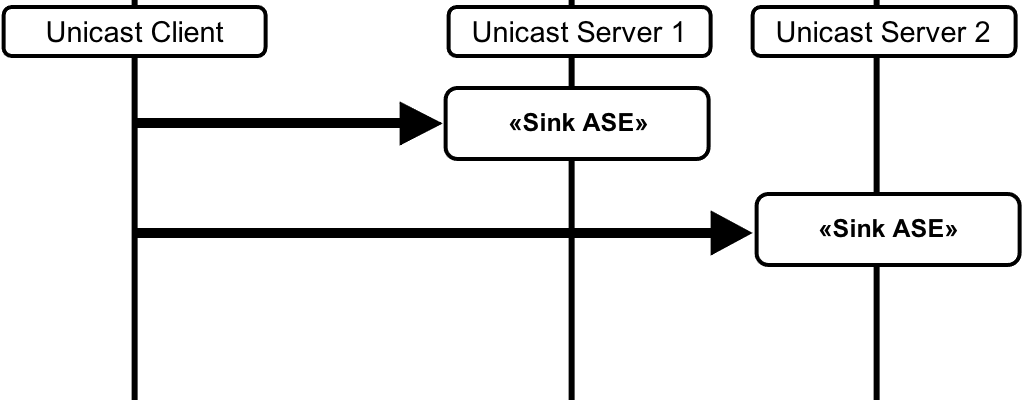

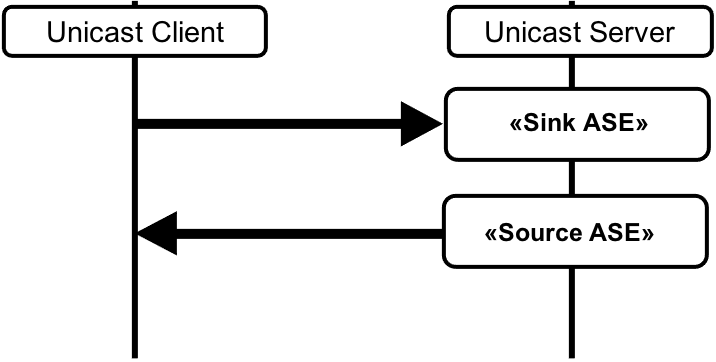

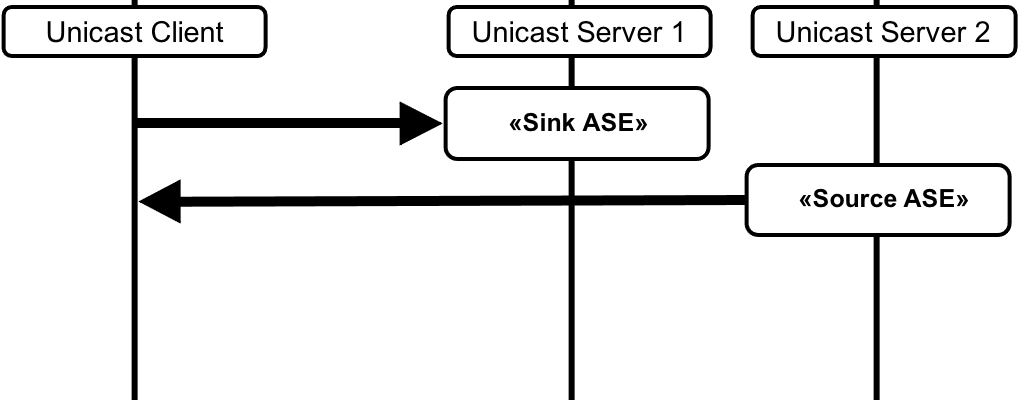

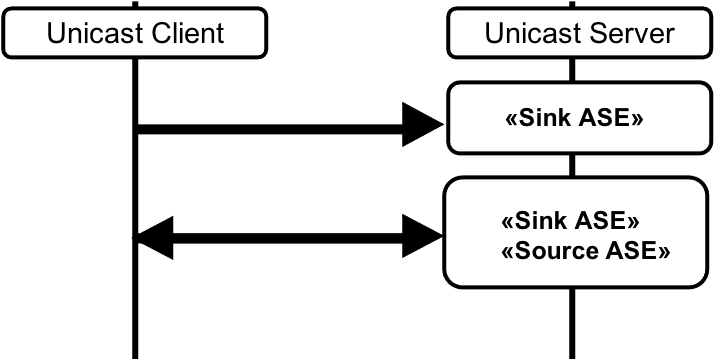

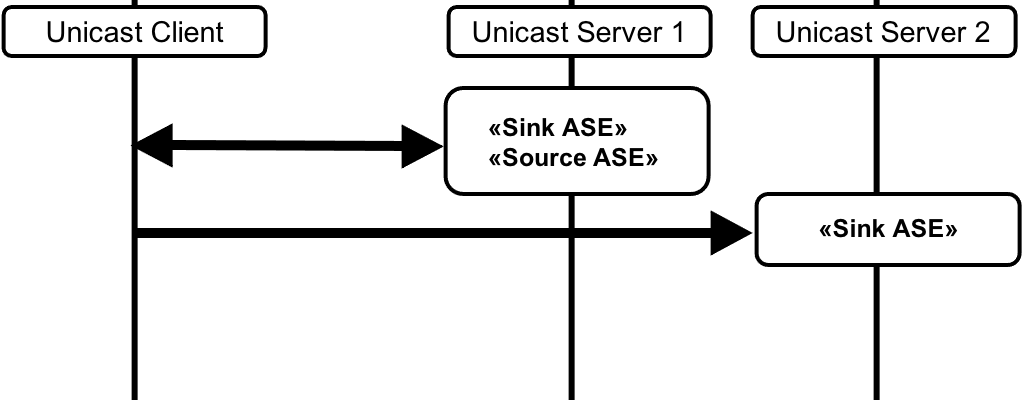

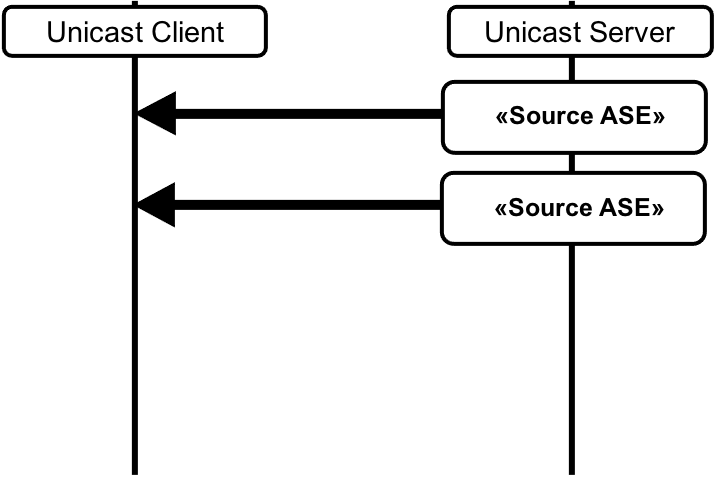

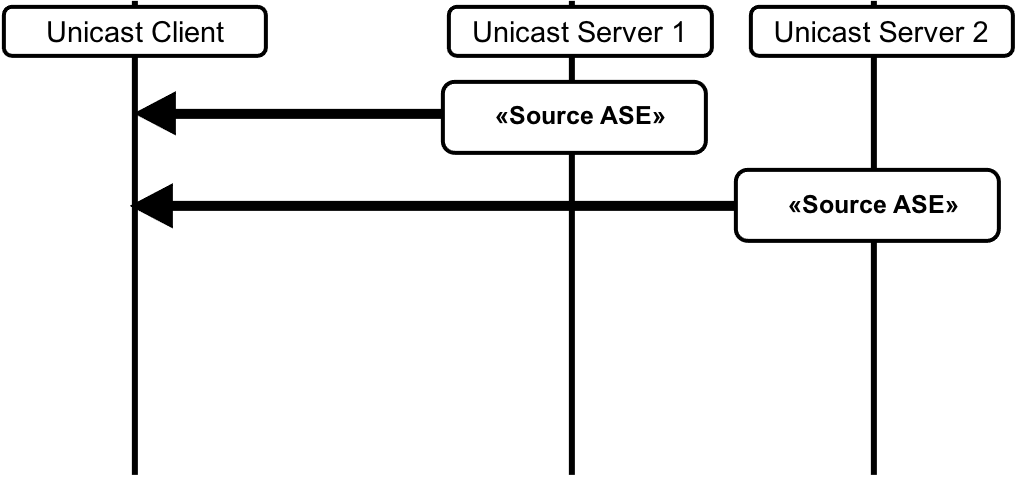

Figure 2.2, Figure 2.3, Figure 2.4, and Figure 2.5 show examples of the relationships between the various profile roles (blue boxes) and services (gray boxes).

2.4. Concurrency limitations and restrictions

A device shall not concurrently occupy the Unicast Client role and Unicast Server role in a connection to a single device because the Unicast Client occupies the LL Central role, and both devices cannot occupy the LL Central role in the same connection, as defined in Volume 6, Part B, Section 1.1.1 in [1].

All other combinations of profile roles may be occupied concurrently by a device. A combination of BAP profile roles on a GATT Server shall have no more than one GATT service defined for the individual profile roles.

2.5. Topology limitations and restrictions

GAP roles are described in Volume 3, Part C, Section 2.2.2 in [1].

The Unicast Client shall use the GAP Central role.

The Unicast Server shall use the GAP Peripheral role.

The Broadcast Source shall use the GAP Broadcaster role.

The Broadcast Sink shall use either the GAP Observer role or the GAP Central role when scanning for periodic advertising data that enables devices to discover and receive broadcast Audio Streams and that describes broadcast Audio Stream configuration.

The Broadcast Sink shall use the GAP Observer role when receiving broadcast Audio Streams.

The Broadcast Sink shall use the GAP Peripheral role when exposing its audio capabilities and its availability to receive broadcast Audio Streams.

The Broadcast Assistant shall use either the GAP Observer role or the GAP Central role when scanning for periodic advertising data that enables devices to discover and receive broadcast Audio Streams and that describes broadcast Audio Stream configuration.

The Broadcast Assistant shall use the GAP Central role when discovering connectable Scan Delegators.

The Broadcast Assistant shall use the GAP Central role when establishing connections to Scan Delegators.

The Broadcast Assistant shall use either the GAP Central role or the GAP Peripheral role when transferring data to the Scan Delegator that the Broadcast Assistant has scanned on behalf of the Scan Delegator.

The Broadcast Assistant shall use the GAP Central role or the GAP Peripheral role when determining Broadcast Sink audio capabilities.

The Scan Delegator shall use the GAP Peripheral role when soliciting for Broadcast Assistants to scan on behalf of the Scan Delegator.

The Scan Delegator shall use either the GAP Central role or the GAP Peripheral role when receiving transfers of the data that Broadcast Assistants have scanned on behalf of the Scan Delegator.

2.6. Transport dependencies

This profile requires Bluetooth LE.

This profile should operate over transports that offer one Unenhanced Attribute Protocol (ATT) bearer or one or more Enhanced ATT (EATT) bearers for the Unicast Client role, the Unicast Server role, the Broadcast Sink role, the Broadcast Assistant role, and the Scan Delegator role.

3. Profile support requirements

Requirements in this section are defined as “Mandatory” (M), “Optional” (O), “Excluded” (X), and “Conditional” (C.n). Conditional requirements (C.n) are listed directly below the table in which they appear.

3.1. BAP role support requirements

Table 3.1 defines BAP role support requirements.

| BAP Role | Support |
|---|---|
| Unicast Server | C.1 |
| Unicast Client | C.1 |
| Broadcast Source | C.1 |
| Broadcast Sink | C.1 |
| Scan Delegator | C.1, C.2 |
| Broadcast Assistant | C.1 |

C.1: Mandatory to support at least one BAP role.

C.2: If the Broadcast Sink role is supported, the Scan Delegator role shall be supported.
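Conditions C.1 and C.2 can be checked mechanically. The sketch below is illustrative only; the function name `check_bap_role_support` and the string role labels are assumptions, not identifiers defined by this profile.

```python
from typing import List, Set

BAP_ROLES = {
    "Unicast Server", "Unicast Client", "Broadcast Source",
    "Broadcast Sink", "Scan Delegator", "Broadcast Assistant",
}


def check_bap_role_support(supported: Set[str]) -> List[str]:
    """Return the conditions of Table 3.1 that a set of roles violates."""
    problems = []
    if not supported & BAP_ROLES:
        problems.append("C.1: at least one BAP role must be supported")
    if "Broadcast Sink" in supported and "Scan Delegator" not in supported:
        problems.append("C.2: Broadcast Sink requires Scan Delegator")
    return problems
```

An empty result means that neither condition is violated for the given set of roles.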

3.2. Service support requirements

Table 3.2 defines dependent service support requirements for the BAP roles that are supported in Table 3.1.

| Service Role (rows) / BAP Role (columns) | Unicast Server | Unicast Client | Broadcast Source | Broadcast Sink | Scan Delegator | Broadcast Assistant |
|---|---|---|---|---|---|---|
| ASCS Client | X | M | X | X | X | X |
| ASCS Server | M | X | X | X | X | X |
| PACS Client | X | M | X | X | X | O |
| PACS Server | M | X | X | M | X | X |
| BASS Client | X | X | X | X | X | M |
| BASS Server | X | X | X | X | M | X |
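As an illustration, the Mandatory entries of Table 3.2 can be held in a lookup keyed by BAP role. The dictionary and function names are hypothetical. The sketch covers only the "M" entries and does not model the Optional PACS Client support for the Broadcast Assistant.

```python
from typing import Dict, Set

# Mandatory ("M") service roles per BAP role, transcribed from Table 3.2.
MANDATORY_SERVICE_ROLES: Dict[str, Set[str]] = {
    "Unicast Server": {"ASCS Server", "PACS Server"},
    "Unicast Client": {"ASCS Client", "PACS Client"},
    "Broadcast Source": set(),
    "Broadcast Sink": {"PACS Server"},
    "Scan Delegator": {"BASS Server"},
    "Broadcast Assistant": {"BASS Client"},
}


def mandatory_service_roles(bap_roles: Set[str]) -> Set[str]:
    """Union of the service roles that are Mandatory for the given BAP roles."""
    required: Set[str] = set()
    for role in bap_roles:
        required |= MANDATORY_SERVICE_ROLES[role]
    return required
```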

3.3. Audio role support requirements

Table 3.3 defines audio role support requirements for Unicast Client and the Unicast Server.

| Audio Role (rows) / BAP Role (columns) | Unicast Server | Unicast Client |
|---|---|---|
| Audio Sink | C.1 | C.2 |
| Audio Source | C.1 | C.2 |

C.1: Mandatory to support at least one of (Audio Source or Audio Sink) when the Unicast Server role is supported.

C.2: Mandatory to support at least one of (Audio Source or Audio Sink) when the Unicast Client role is supported.

3.4. Link Layer feature support requirements

LL feature support is defined in the Bluetooth Core Specification [1], Volume 6, Part B [8].

Table 3.4 defines LL feature support for the BAP roles that are supported in Table 3.1.

| LL Feature (rows) / BAP Role (columns) | Unicast Server | Unicast Client | Broadcast Source | Broadcast Sink | Scan Delegator | Broadcast Assistant |
|---|---|---|---|---|---|---|
| LE Encryption (Section 4.6.1 [8]) | M | M | C.2 | M | M | M |
| LE Extended Advertising (Section 4.6.12 [8]) | M | M | M | M | M | M |
| LE Periodic Advertising (Section 4.6.13 [8]) | X | X | M | M | M | M |
| PAST Sender (Section 4.6.23 [8]) | X | X | X | X | X | O |
| PAST Recipient (Section 4.6.24 [8]) | X | X | X | X | O | X |
| Initiating PAST for local PA (Section 5.1.13 [8]) | X | X | X | X | X | C.1 |
| Initiating PAST for remote PA (Section 5.1.13 [8]) | X | X | X | X | X | C.1 |
| CIS Central (Section 4.6.27 [8]) | X | M | X | X | X | X |
| CIS Peripheral (Section 4.6.27 [8]) | M | X | X | X | X | X |
| Isochronous Broadcaster (Section 4.6.28 [8]) | X | X | M | X | X | X |
| Synchronized Receiver (Section 4.6.29 [8]) | X | X | X | M | X | O |
| Encrypting a Broadcast Isochronous Stream (Section 4.4.6.1, 4.4.6.10 [8]) | X | X | C.3 | X | X | X |
| Non-Encrypted Broadcast Isochronous Stream (Section 4.4.6.1 [8]) | X | X | C.3 | X | X | X |

C.1: Mandatory to support at least one of (“Initiating PAST for local PA” or “Initiating PAST for remote PA”) if “PAST Sender” is supported.

C.2: Mandatory if “Encrypting a Broadcast Audio Stream” is supported, otherwise Optional.

C.3: Mandatory to support at least one of (“Encrypting a Broadcast Audio Stream” or “Non-encrypted Broadcast Audio Stream”) if “Isochronous Broadcaster” is supported, otherwise Excluded.
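The conditions below Table 3.4 can be expressed as simple checks on a set of supported feature names. This is a sketch under stated assumptions: the function names are hypothetical, the feature labels follow the row names of Table 3.4, and the "Encrypting a Broadcast Audio Stream" and "Non-encrypted Broadcast Audio Stream" wording in C.2 and C.3 is assumed to refer to the two Broadcast Isochronous Stream rows of the table.

```python
from typing import List, Set

ENCRYPTED_BIS = "Encrypting a Broadcast Isochronous Stream"
NON_ENCRYPTED_BIS = "Non-Encrypted Broadcast Isochronous Stream"
PAST_INITIATION = {"Initiating PAST for local PA", "Initiating PAST for remote PA"}


def check_broadcast_assistant(features: Set[str]) -> List[str]:
    """Check C.1 for the Broadcast Assistant column."""
    if "PAST Sender" in features and not features & PAST_INITIATION:
        return ["C.1: PAST Sender requires initiating PAST for a local or remote PA"]
    return []


def check_broadcast_source(features: Set[str]) -> List[str]:
    """Check C.2 and C.3 for the Broadcast Source column."""
    problems = []
    if ENCRYPTED_BIS in features and "LE Encryption" not in features:
        problems.append("C.2: encrypted broadcast requires LE Encryption")
    bis_modes = features & {ENCRYPTED_BIS, NON_ENCRYPTED_BIS}
    if "Isochronous Broadcaster" in features and not bis_modes:
        problems.append("C.3: Isochronous Broadcaster requires a BIS mode")
    if "Isochronous Broadcaster" not in features and bis_modes:
        problems.append("C.3: BIS modes are Excluded without Isochronous Broadcaster")
    return problems
```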

3.5. Unicast Server support requirements

The Unicast Server shall instantiate one Audio Stream Control Service.

The Unicast Server shall instantiate one Published Audio Capabilities Service.

3.5.1. ATT and EATT transport requirements

The Unicast Server shall support a minimum ATT_MTU of 64 octets for one Unenhanced ATT bearer, or for at least one Enhanced ATT bearer if the Unicast Server supports Enhanced ATT bearers.

3.5.2. Additional Published Audio Capabilities Service requirements

This section defines additional requirements for the Unicast Server beyond those defined in PACS [5].

Table 3.5 shows the Mandatory and Optional audio capability support requirements defined by this profile for the Unicast Server.

If the Unicast Server supports the Audio Sink role, the Unicast Server shall support reception and decoding of audio data that is encoded using the settings defined as Mandatory in Table 3.5. The Unicast Server may support reception and decoding of audio data that is encoded using the settings defined as Optional in Table 3.5 or any other settings defined by an implementation or by a higher-layer specification.

If the Unicast Server supports the Audio Sink role, the Unicast Server shall expose all supported audio capability settings for the Audio Sink role in one or more Sink PAC characteristics containing one or more PAC records.

If the Unicast Server supports the Audio Source role, the Unicast Server shall support encoding and transmission of audio data using the settings defined as Mandatory in Table 3.5. The Unicast Server may support encoding and transmission of audio data using the settings defined as Optional in Table 3.5 or any other settings defined by an implementation or a higher-layer specification.

If the Unicast Server supports the Audio Source role, the Unicast Server shall expose all supported audio capability settings for the Audio Source role in one or more Source PAC characteristics containing one or more PAC records.

| Codec Capability Setting | Codec_ID | Supported_Sampling_Frequencies (kHz) (Section 4.3.1) | Supported_Frame_Durations (ms) (Section 4.3.1) | Supported_Octets_per_Codec_Frame (Octets) (Section 4.3.1) | Requirement: Audio Sink | Requirement: Audio Source |
|---|---|---|---|---|---|---|
| 8_1 | LC3 | 8 | 7.5 | 26¹ (27.734 kbps²) | O | O |
| 8_2 | LC3 | 8 | 10 | 30¹ (24 kbps²) | O | O |
| 16_1 | LC3 | 16 | 7.5 | 30¹ (32 kbps²) | O | O |
| 16_2 | LC3 | 16 | 10 | 40¹ (32 kbps²) | M | M |
| 24_1 | LC3 | 24 | 7.5 | 45¹ (48 kbps²) | O | O |
| 24_2 | LC3 | 24 | 10 | 60¹ (48 kbps²) | M | O |
| 32_1 | LC3 | 32 | 7.5 | 60¹ (64 kbps²) | O | O |
| 32_2 | LC3 | 32 | 10 | 80¹ (64 kbps²) | O | O |
| 441_1 | LC3 | 44.1 | 8.163³ | 97¹ (95.06 kbps²) | O | O |
| 441_2 | LC3 | 44.1 | 10.884³ | 130¹ (95.55 kbps²) | O | O |
| 48_1 | LC3 | 48 | 7.5 | 75¹ (80 kbps²) | O | O |
| 48_2 | LC3 | 48 | 10 | 100¹ (80 kbps²) | O | O |
| 48_3 | LC3 | 48 | 7.5 | 90¹ (96 kbps²) | O | O |
| 48_4 | LC3 | 48 | 10 | 120¹ (96 kbps²) | O | O |
| 48_5 | LC3 | 48 | 7.5 | 117¹ (124.8 kbps²) | O | O |
| 48_6 | LC3 | 48 | 10 | 155¹ (124 kbps²) | O | O |

The three Supported_* columns are Codec_Specific_Capabilities (defined in PACS [5]).

¹ The supported range shall include this value.

² Bit rates are calculated according to Section 3.2.5 in [7].

³ Effective frame durations. The 44.1 kHz sampling rate results in a deviation from the 7.5 ms/10 ms frame durations that can be exposed using the Supported_Frame_Durations LTV structure. For 44.1 kHz/7.5 ms the actual frame duration is equivalent to 360 (samples per frame) divided by 44100 (samples per second), which equals 8.16327 ms per frame, and for 44.1 kHz/10 ms the actual frame duration is equal to 480 (samples per frame) divided by 44100 (samples per second), which equals 10.88435 ms per frame. The LC3 [7] codec encodes 97 octets (for 7.5 ms/8.163 ms effective) or 130 octets (for 10 ms/10.884 ms effective) into each SDU, which arrives at the controller every 8.16327 ms or 10.88435 ms. The transmitting device assigns a time offset to each SDU and delivers the time_offset with each SDU at the receiver, as defined in Volume 6, Part G, Section 3.1 in [1]. Determination of the time_offset parameter at the transmitting device is implementation-specific. Compensation for the difference between 8.16327 ms and 8.163 ms, and/or compensation between 10.88435 ms and 10.884 ms, is implementation-specific.
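The bit rates and effective frame durations listed in Table 3.5 can be reproduced with simple arithmetic. The following is a minimal sketch, assuming that the bit rate is the number of bits per codec frame divided by the frame duration, and using the samples-per-frame figures from footnote 3 for 44.1 kHz. The function names are illustrative and are not defined by this profile.

```python
from fractions import Fraction


def effective_frame_duration_ms(sampling_hz: int, nominal_ms: Fraction) -> Fraction:
    """Return the effective frame duration in milliseconds.

    For 44.1 kHz, footnote 3 gives 360 samples per frame (nominal 7.5 ms)
    or 480 samples per frame (nominal 10 ms), so the effective duration is
    samples / 44100 rather than the nominal value.
    """
    if sampling_hz == 44100:
        samples = 360 if nominal_ms == Fraction(15, 2) else 480
        return Fraction(samples * 1000, sampling_hz)
    return nominal_ms


def bit_rate_kbps(octets_per_frame: int, frame_ms: Fraction) -> float:
    """Bit rate in kbps: bits per frame divided by milliseconds per frame."""
    return float(octets_per_frame * 8 / frame_ms)


# 16_2: 40 octets every 10 ms
rate_16_2 = bit_rate_kbps(40, Fraction(10))
# 441_1: 97 octets every 360/44100 s
rate_441_1 = bit_rate_kbps(97, effective_frame_duration_ms(44100, Fraction(15, 2)))
```

Exact fractions are used for the durations so that the 44.1 kHz values are not rounded before the division.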

If the Unicast Server supports vendor-specific codec audio capabilities, the Unicast Server shall use the format defined in Table 3.6 when populating the Codec_ID field in PAC records exposing vendor-specific audio capabilities.

| Parameter | Value |
|---|---|
| Codec_ID | Octet 0: 0xFF = Vendor-specific Coding_Format. Octet 1–2: Company ID (Company ID values are defined in Bluetooth Assigned Numbers [2]). Octet 3–4: Vendor-specific codec_ID. |
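As an illustration of the layout in Table 3.6, the sketch below packs a 5-octet vendor-specific Codec_ID. It assumes little-endian ordering for the two 16-bit fields, which is not stated in this section; the function name and the example identifiers are hypothetical.

```python
import struct

VENDOR_SPECIFIC_CODING_FORMAT = 0xFF  # Octet 0 value from Table 3.6


def vendor_codec_id(company_id: int, vendor_codec: int) -> bytes:
    """Pack a 5-octet vendor-specific Codec_ID.

    Assumption: the Company ID and the vendor-specific codec_ID are each
    transmitted least significant octet first.
    struct.pack raises struct.error if either value exceeds 16 bits.
    """
    return struct.pack("<BHH", VENDOR_SPECIFIC_CODING_FORMAT, company_id, vendor_codec)


# Hypothetical Company ID 0x1234 and vendor codec 0x0001
codec_id = vendor_codec_id(0x1234, 0x0001)
```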

3.5.2.1. Audio data Context Type requirements

If the Unicast Server supports the Audio Sink role, the Unicast Server shall support the Context Type value defined as ‘unspecified’ in the Supported_Sink_Contexts field of the Supported Audio Contexts characteristic.

If the Unicast Server supports the Audio Source role, the Unicast Server shall support the Context Type value defined as ‘unspecified’ in the Supported_Source_Contexts field of the Supported Audio Contexts characteristic.

3.5.3. Additional Audio Stream Control Service requirements

This section defines additional requirements for the Unicast Server beyond those requirements defined in ASCS [4].

If the Unicast Server supports the Audio Sink role:

- The Unicast Server shall support receiving multiple Audio Channels if the Unicast Server exposes more than one bit set to 0b1 in the Sink Audio Locations [5] characteristic value.
- If the Unicast Server supports receiving multiple Audio Channels, the Unicast Server shall expose a number of Sink ASE [4] characteristics sufficient to transport audio data for the highest number of supported Audio Channels.
- Support of multiplexing of Audio Channels for a Sink ASE is determined by exposing a value of the Supported_Audio_Channel_Counts (see Section 4.3.1) LTV structure greater than 1 in any Sink PAC [5] characteristic.
- If multiplexing of Audio Channels is not supported, the number of Sink ASEs shall be equal to or greater than the number of bits set to 0b1 in the Sink Audio Locations characteristic value.
- If multiplexing of Audio Channels is supported, the number of Sink ASEs shall be equal to or greater than the number of bits set to 0b1 in the Sink Audio Locations characteristic value divided by the highest number of Audio Channels supported in the Supported_Audio_Channel_Counts LTV.

If the Unicast Server supports the Audio Source role:

- The Unicast Server shall support transmitting multiple Audio Channels if the Unicast Server exposes more than one bit set to 0b1 in the Source Audio Locations [5] characteristic value.
- If the Unicast Server supports transmitting multiple Audio Channels, the Unicast Server shall expose a number of Source ASE [4] characteristics sufficient to transport audio data for the highest number of supported Audio Channels.
- Support of multiplexing of Audio Channels for a Source ASE is determined by exposing a value of the Supported_Audio_Channel_Counts (see Section 4.3.1) LTV structure greater than 1 in any Source PAC [5] characteristic.
- If multiplexing of Audio Channels is not supported, the number of Source ASEs shall be equal to or greater than the number of bits set to 0b1 in the Source Audio Locations characteristic value.
- If multiplexing of Audio Channels is supported, the number of Source ASEs shall be equal to or greater than the number of bits set to 0b1 in the Source Audio Locations characteristic value divided by the highest number of Audio Channels supported in the Supported_Audio_Channel_Counts LTV.
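The ASE count rules above reduce to a small calculation that applies equally to Sink and Source ASEs. The sketch below is one possible reading: it rounds the quotient up, on the assumption that a fractional result still requires a whole ASE. The function name and parameters are illustrative only.

```python
def min_ase_count(audio_locations: int, max_channels_per_ase: int = 1) -> int:
    """Lower bound on the number of Sink (or Source) ASEs.

    audio_locations: the Sink or Source Audio Locations bitfield value.
    max_channels_per_ase: highest channel count exposed in
        Supported_Audio_Channel_Counts; 1 means multiplexing is not supported.
    """
    if max_channels_per_ase < 1:
        raise ValueError("max_channels_per_ase must be at least 1")
    location_count = bin(audio_locations).count("1")
    # Ceiling division (assumption): a fractional quotient needs a whole ASE.
    return -(-location_count // max_channels_per_ase)
```

For example, a server exposing two Audio Locations needs at least two ASEs without multiplexing, or at least one ASE if it supports two Audio Channels per ASE.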

To inform unconnected Unicast Clients that the Unicast Server is connectable and available to receive or transmit audio data for specific Context Type values (see Section 5.4), the Unicast Server shall transmit connectable extended advertising PDUs that contain the Service Data AD data type (see [3]), including additional service data defined in Table 3.7.

A Targeted Announcement (Announcement Type = 0x01) means the Unicast Server is connectable and is requesting a connection.

A General Announcement (Announcement Type = 0x00) means the Unicast Server is connectable but is not requesting a connection.

The AD format shown in Table 3.7 is defined in Volume 3, Part C, Section 11 in [1].

| Field | Size (Octets) | Description |
|---|---|---|
| Length | 1 | Length of Type and Value fields for AD data type |
| Type: «Service Data - 16-bit UUID» | 1 | Defined in Bluetooth Assigned Numbers [2] |
| Value | Varies | 2-octet Service UUID followed by additional service data |
| Audio Stream Control Service UUID | 2 | Defined in Bluetooth Assigned Numbers [2] |
| Announcement Type | 1 | 0x00 = General Announcement; 0x01 = Targeted Announcement |
| Available Audio Contexts | 4 | Available Audio Contexts characteristic [5] value |
| Metadata_Length | 1 | Length of the Metadata field |
| Metadata | Varies | LTV-formatted Metadata. Shall exist only if the Metadata_Length parameter value is ≠ 0x00 |
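The field order in Table 3.7 can be illustrated by assembling the AD structure octet by octet. This is a sketch under stated assumptions: the AD type value 0x16 and the Audio Stream Control Service UUID 0x184E are assumed here and should be checked against Bluetooth Assigned Numbers [2]; the split of the 4-octet Available Audio Contexts value into a sink half followed by a source half is assumed from PACS [5]; and multi-octet fields are assumed to be little-endian. The function name is hypothetical.

```python
import struct

AD_TYPE_SERVICE_DATA_16 = 0x16  # assumed; see Bluetooth Assigned Numbers [2]
ASCS_UUID = 0x184E              # assumed; see Bluetooth Assigned Numbers [2]


def unicast_announcement(targeted: bool, sink_contexts: int,
                         source_contexts: int, metadata: bytes = b"") -> bytes:
    """Build the Service Data AD structure laid out in Table 3.7."""
    announcement_type = 0x01 if targeted else 0x00
    value = struct.pack("<HBHHB", ASCS_UUID, announcement_type,
                        sink_contexts, source_contexts, len(metadata)) + metadata
    length = 1 + len(value)  # Length covers the Type and Value fields
    if length > 0xFF:
        raise ValueError("AD structure does not fit a 1-octet Length field")
    return bytes([length, AD_TYPE_SERVICE_DATA_16]) + value


# Targeted Announcement with one sink context bit set and no Metadata
ad = unicast_announcement(True, 0x0004, 0x0000)
```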

3.6. Unicast Client support requirements

This section defines support requirements for the Unicast Client role.

3.6.1. ATT and EATT transport requirements

The Unicast Client shall support a minimum ATT_MTU of 64 octets for one Unenhanced ATT bearer, or for at least one Enhanced ATT bearer if the Unicast Client supports Enhanced ATT bearers.

3.6.2. Additional GATT sub-procedure requirements

GATT sub-procedure support requirements on Unenhanced ATT bearers required by all GATT clients are defined in Volume 3, Part G, Section 4.2 in [1].

The Unicast Client shall support the additional GATT sub-procedure requirements defined in Table 3.8.

Requirements in this section represent a minimum set of requirements for a client. Other GATT sub-procedures may be used if supported by both the client and the server.

| GATT Sub-Procedure | Requirement |
|---|---|
| Exchange MTU | M |
| Discover All Primary Services | C.1 |
| Discover Primary Services by Service UUID | C.1 |
| Discover All Characteristics of a Service | C.2 |
| Discover Characteristic by UUID | C.2 |
| Discover All Characteristic Descriptors | M |
| Read Characteristic Value | M |
| Write Characteristic Value | M |
| Write Without Response | M |
| Write Long Characteristic Values | M |
| Notifications | M |
| Read Characteristic Descriptors | M |
| Write Characteristic Descriptors | M |

C.1: Mandatory to support at least one Primary Service Discovery procedure.

C.2: Mandatory to support at least one Characteristic Discovery procedure.

3.6.3. Service and characteristic discovery support requirements

The Unicast Client shall support the service and characteristic discovery procedures defined in Table 3.9.

| Procedure | Section Reference | Requirement |
|---|---|---|
| Service discovery | 3.6.5 | M |
| Published Audio Capabilities Service discovery | 3.6.5.1 | M |
| Audio Stream Control Service discovery | 3.6.5.2 | M |
| Characteristic discovery | 3.6.6 | M |
| Published Audio Capabilities Service characteristic discovery | 3.6.6.1 | M |
| Audio Stream Control Service characteristic discovery | 3.6.6.2 | M |

3.6.4. Characteristic support requirements

The Unicast Client characteristic support requirements are defined in Table 3.10.

| Characteristic | Section Reference | Requirement |
|---|---|---|
| Sink PAC | 3.6.6.1.1 | C.1 |
| Sink Audio Locations | 3.6.6.1.2 | C.1 |
| Source PAC | 3.6.6.1.3 | C.2 |
| Source Audio Locations | 3.6.6.1.4 | C.2 |
| Supported Audio Contexts | 3.6.6.1.5 | M |
| Available Audio Contexts | 3.6.6.1.6 | M |
| ASE Control Point | 3.6.6.2.1 | M |
| Sink ASE | 3.6.6.2.2 | C.1 |
| Source ASE | 3.6.6.2.3 | C.2 |

C.1: Mandatory to support if the Unicast Client supports the Audio Source role, otherwise Excluded.

C.2: Mandatory to support if the Unicast Client supports the Audio Sink role, otherwise Excluded.
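Conditions C.1 and C.2 pair each Unicast Client role with the opposite-direction characteristics on the server: a client acting as Audio Source uses the Sink characteristics, and a client acting as Audio Sink uses the Source characteristics. A minimal sketch of that mapping follows; the function name is illustrative and not part of this profile.

```python
def required_characteristics(audio_source: bool, audio_sink: bool) -> set[str]:
    """Characteristics a Unicast Client must support, per Table 3.10."""
    required = {"Supported Audio Contexts", "Available Audio Contexts",
                "ASE Control Point"}  # Mandatory regardless of role
    if audio_source:  # C.1: the client transmits, so it uses the server's Sink side
        required |= {"Sink PAC", "Sink Audio Locations", "Sink ASE"}
    if audio_sink:    # C.2: the client receives, so it uses the server's Source side
        required |= {"Source PAC", "Source Audio Locations", "Source ASE"}
    return required
```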

3.6.5. Service discovery

This section defines service discovery procedures for the Unicast Client role.

3.6.5.1. Published Audio Capabilities Service discovery

The Unicast Client shall use either the GATT Discover All Primary Services sub-procedure or the GATT Discover Primary Services by Service UUID sub-procedure to discover the Published Audio Capabilities Service.

3.6.5.2. Audio Stream Control Service discovery

The Unicast Client shall use either the GATT Discover All Primary Services sub-procedure or the GATT Discover Primary Services by Service UUID sub-procedure to discover the Audio Stream Control Service.

3.6.6. Characteristic discovery

This section defines characteristic discovery procedures for the Unicast Client.

3.6.6.1. Published Audio Capabilities Service characteristic discovery

The Unicast Client shall use either the GATT Discover All Characteristics of a Service sub-procedure or the GATT Discover Characteristics by Characteristic UUID sub-procedure to discover Published Audio Capabilities Service characteristics.

For each discovered Published Audio Capabilities Service characteristic, if the characteristic properties include support for notifications, the Unicast Client shall use the Discover All Characteristic Descriptors sub-procedure to discover the Client Characteristic Configuration descriptor for that characteristic.

To configure a Published Audio Capabilities Service characteristic for notifications, the Unicast Client shall use the GATT Write Characteristic Descriptors sub-procedure to write to the Client Characteristic Configuration descriptor for that characteristic.

3.6.6.1.1. Sink PAC characteristic discovery

If the Unicast Client supports the Audio Source role, the Unicast Client shall discover all instances of the Sink PAC characteristic.

For each discovered Sink PAC characteristic, if the characteristic properties include support for notifications, the Unicast Client shall configure the Sink PAC characteristic for notifications.

3.6.6.1.2. Sink Audio Locations characteristic discovery

If the Unicast Client supports the Audio Source role, the Unicast Client shall discover the Sink Audio Locations characteristic.

If the Unicast Client discovers the Sink Audio Locations characteristic, and the characteristic properties include support for notifications, the Unicast Client shall configure the Sink Audio Locations characteristic for notifications.

3.6.6.1.3. Source PAC characteristic discovery

If the Unicast Client supports the Audio Sink role, the Unicast Client shall discover all instances of the Source PAC characteristic.

For each discovered Source PAC characteristic, if the characteristic properties include support for notifications, the Unicast Client shall configure the Source PAC characteristic for notifications.

3.6.6.1.4. Source Audio Locations characteristic discovery

If the Unicast Client supports the Audio Sink role, the Unicast Client shall discover the Source Audio Locations characteristic.

If the Unicast Client discovers the Source Audio Locations characteristic, and the characteristic properties include support for notifications, the Unicast Client shall configure the Source Audio Locations characteristic for notifications.

3.6.6.1.5. Supported Audio Contexts characteristic discovery

The Unicast Client shall discover the Supported Audio Contexts characteristic.

If the Unicast Client discovers the Supported Audio Contexts characteristic, and the characteristic properties include support for notifications, the Unicast Client shall configure the Supported Audio Contexts characteristic for notifications.

3.6.6.1.6. Available Audio Contexts characteristic discovery

The Unicast Client shall discover the Available Audio Contexts characteristic.

The Unicast Client shall configure the Available Audio Contexts characteristic for notifications.

3.6.6.2. Audio Stream Control Service characteristic discovery

The Unicast Client shall use either the GATT Discover All Characteristics of a Service sub-procedure or the GATT Discover Characteristics by Characteristic UUID sub-procedure to discover Audio Stream Control Service characteristics.

For each discovered Audio Stream Control Service characteristic, if the characteristic properties include support for notifications, the Unicast Client shall use the Discover All Characteristic Descriptors sub-procedure to discover the Client Characteristic Configuration descriptor for that characteristic.

To configure an Audio Stream Control Service characteristic for notifications, the Unicast Client shall use the GATT Write Characteristic Descriptors sub-procedure to write to the Client Characteristic Configuration descriptor for that characteristic.

3.6.6.2.1. ASE Control Point characteristic discovery

The Unicast Client shall discover the ASE Control Point characteristic.

The Unicast Client shall configure the ASE Control Point characteristic for notifications.

3.6.6.2.2. Sink ASE characteristic discovery

If the Unicast Client supports the Audio Source role, the Unicast Client shall discover all instances of the Sink ASE characteristic.

The Unicast Client shall configure all instances of the Sink ASE characteristic for notifications.

3.6.6.2.3. Source ASE characteristic discovery

If the Unicast Client supports the Audio Sink role, the Unicast Client shall discover all instances of the Source ASE characteristic.

The Unicast Client shall configure all instances of the Source ASE characteristic for notifications.
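The notification requirements in Sections 3.6.6.1 and 3.6.6.2 fall into two groups: characteristics that the client configures for notifications unconditionally, and characteristics that it configures only when their properties include notification support. The sketch below plans the Client Characteristic Configuration writes from a list of discovered characteristics. The types and names are hypothetical, and reporting an unconditional characteristic that lacks notification support as a problem is a design choice made here, not a behavior defined by this profile.

```python
from dataclasses import dataclass

# Characteristics that Section 3.6.6 says to configure unconditionally.
ALWAYS_NOTIFY = frozenset({"Available Audio Contexts", "ASE Control Point",
                           "Sink ASE", "Source ASE"})


@dataclass(frozen=True)
class DiscoveredCharacteristic:
    name: str
    supports_notify: bool  # taken from the characteristic properties


def plan_notifications(discovered: list[DiscoveredCharacteristic]) -> tuple[list[str], list[str]]:
    """Return (names to configure for notifications, names that look non-conformant)."""
    to_subscribe, problems = [], []
    for char in discovered:
        if char.supports_notify:
            to_subscribe.append(char.name)
        elif char.name in ALWAYS_NOTIFY:
            # The client is required to subscribe, but the server does not allow it.
            problems.append(char.name)
    return to_subscribe, problems
```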

3.6.7. Unicast Client audio capability configuration support

Table 3.11 shows the Mandatory and Optional audio capability configuration support settings defined by this profile for the Unicast Client.

If the Unicast Client supports the Audio Source role, the Unicast Client shall support encoding and transmission of audio data using the settings defined as Mandatory in Table 3.11. The Unicast Client may support encoding and transmission of audio data using the settings defined as Optional in Table 3.11 or any other settings defined by an implementation or by a higher-layer specification.

If the Unicast Client supports the Audio Sink role, the Unicast Client shall support reception and decoding of audio data encoded using the settings defined as Mandatory in Table 3.11. The Unicast Client may support reception and decoding of audio data encoded using the settings defined as Optional in Table 3.11 or any other settings defined by an implementation or by a higher-layer specification.

| Codec Configuration Setting | Codec_ID | Sampling_Frequency (kHz) (Section 4.3.2) | Frame_Duration (ms) (Section 4.3.2) | Octets_Per_Codec_Frame (Octets) (Section 4.3.2) | Requirement: Audio Sink | Requirement: Audio Source |
|---|---|---|---|---|---|---|
| 8_1 | LC3 | 8 | 7.5 | 26 (27.734 kbps¹) | O | O |
| 8_2 | LC3 | 8 | 10 | 30 (24 kbps¹) | O | O |
| 16_1 | LC3 | 16 | 7.5 | 30 (32 kbps¹) | O | O |
| 16_2 | LC3 | 16 | 10 | 40 (32 kbps¹) | M | M |
| 24_1 | LC3 | 24 | 7.5 | 45 (48 kbps¹) | O | O |
| 24_2 | LC3 | 24 | 10 | 60 (48 kbps¹) | M | O |
| 32_1 | LC3 | 32 | 7.5 | 60 (64 kbps¹) | O | O |
| 32_2 | LC3 | 32 | 10 | 80 (64 kbps¹) | O | O |
| 441_1 | LC3 | 44.1 | 8.163² | 97 (95.06 kbps¹) | O | O |
| 441_2 | LC3 | 44.1 | 10.884² | 130 (95.55 kbps¹) | O | O |
| 48_1 | LC3 | 48 | 7.5 | 75 (80 kbps¹) | O | O |
| 48_2 | LC3 | 48 | 10 | 100 (80 kbps¹) | O | O |
| 48_3 | LC3 | 48 | 7.5 | 90 (96 kbps¹) | O | O |
| 48_4 | LC3 | 48 | 10 | 120 (96 kbps¹) | O | O |
| 48_5 | LC3 | 48 | 7.5 | 117 (124.8 kbps¹) | O | O |
| 48_6 | LC3 | 48 | 10 | 155 (124 kbps¹) | O | O |

The Sampling_Frequency, Frame_Duration, and Octets_Per_Codec_Frame columns are Codec-Specific Configuration parameters (defined in ASCS [4]).

¹ Bit rates are calculated according to Section 3.2.5 in [7].

² Effective frame durations. The 44.1 kHz sampling rate results in a deviation from the 7.5 ms/10 ms frame durations that can be configured using the Frame_Duration LTV structure. For 44.1 kHz/7.5 ms the actual frame duration is equivalent to 360 (samples per frame) divided by 44100 (samples per second), which equals 8.16327 ms per frame, and for 44.1 kHz/10 ms the actual frame duration is equal to 480 (samples per frame) divided by 44100 (samples per second), which equals 10.88435 ms per frame. The LC3 [7] codec encodes 97 octets (for 7.5 ms/8.163 ms effective) or 130 octets (for 10 ms/10.884 ms effective) into each SDU, which arrives at the controller every 8.16327 ms or 10.88435 ms. The transmitting device assigns a time offset to each SDU and delivers the time_offset with each SDU at the receiver, as defined in Volume 6, Part G, Section 3.1 in [1]. Determination of the time_offset parameter at the transmitting device is implementation-specific. Compensation for the difference between 8.16327 ms and 8.163 ms, and/or compensation between 10.88435 ms and 10.884 ms, is implementation-specific.

3.7. Broadcast Source support requirements

This section defines support requirements for the Broadcast Source role.

3.7.1. Broadcast Source audio capability configuration support

The Broadcast Source shall support encoding and transmission of audio data using the settings defined as Mandatory in Table 3.12. The Broadcast Source may support encoding and transmission of audio data using the settings defined as Optional in Table 3.12 or any other settings defined by an implementation or by a higher-layer specification.

| Codec Configuration Setting | Codec_ID | Sampling_Frequency (kHz) (Section 4.3.2) | Frame_Duration (ms) (Section 4.3.2) | Octets_Per_Codec_Frame (Octets) (Section 4.3.2) | Requirement |
|---|---|---|---|---|---|
| 8_1 | LC3 | 8 | 7.5 | 26 (27.734 kbps¹) | O |
| 8_2 | LC3 | 8 | 10 | 30 (24 kbps¹) | O |
| 16_1 | LC3 | 16 | 7.5 | 30 (32 kbps¹) | O |
| 16_2 | LC3 | 16 | 10 | 40 (32 kbps¹) | M |
| 24_1 | LC3 | 24 | 7.5 | 45 (48 kbps¹) | O |
| 24_2 | LC3 | 24 | 10 | 60 (48 kbps¹) | O |
| 32_1 | LC3 | 32 | 7.5 | 60 (64 kbps¹) | O |
| 32_2 | LC3 | 32 | 10 | 80 (64 kbps¹) | O |
| 441_1 | LC3 | 44.1 | 8.163² | 97 (95.06 kbps¹) | O |
| 441_2 | LC3 | 44.1 | 10.884² | 130 (95.55 kbps¹) | O |
| 48_1 | LC3 | 48 | 7.5 | 75 (80 kbps¹) | O |
| 48_2 | LC3 | 48 | 10 | 100 (80 kbps¹) | O |
| 48_3 | LC3 | 48 | 7.5 | 90 (96 kbps¹) | O |
| 48_4 | LC3 | 48 | 10 | 120 (96 kbps¹) | O |
| 48_5 | LC3 | 48 | 7.5 | 117 (124.8 kbps¹) | O |
| 48_6 | LC3 | 48 | 10 | 155 (124 kbps¹) | O |

The Sampling_Frequency, Frame_Duration, and Octets_Per_Codec_Frame columns are Codec-Specific Configuration parameters (see Table 3.16).

¹ Bit rates are calculated according to Section 3.2.5 in [7].

² Effective frame durations. The 44.1 kHz sampling rate results in a deviation from the 7.5 ms/10 ms frame durations that can be configured using the Frame_Duration LTV structure. For 44.1 kHz/7.5 ms the actual frame duration is equivalent to 360 (samples per frame) divided by 44100 (samples per second), which equals 8.16327 ms per frame, and for 44.1 kHz/10 ms the actual frame duration is equal to 480 (samples per frame) divided by 44100 (samples per second), which equals 10.88435 ms per frame. The LC3 [7] codec encodes 97 octets (for 7.5 ms/8.163 ms effective) or 130 octets (for 10 ms/10.884 ms effective) into each SDU, which arrives at the controller every 8.16327 ms or 10.88435 ms. The transmitting device assigns a time offset to each SDU and delivers the time_offset with each SDU at the receiver, as defined in Volume 6, Part G, Section 3.1 in [1]. Determination of the time_offset parameter at the transmitting device is implementation-specific. Compensation for the difference between 8.16327 ms and 8.163 ms, and/or compensation between 10.88435 ms and 10.884 ms, is implementation-specific.

The Broadcast Source may support encoding and transmission of audio data encoded using vendor-specific codec audio capability settings. If the Broadcast Source transmits audio data encoded using vendor-specific codec audio capability settings, the Broadcast Source shall use the format defined in Table 3.13 when populating the Codec_ID field.

| Parameter | Value |
|---|---|
| Codec_ID | Octet 0: 0xFF = Vendor-specific Coding_Format. Octet 1–2: Company ID (Company ID values are defined in Bluetooth Assigned Numbers [2]). Octet 3–4: Vendor-specific codec_ID. |

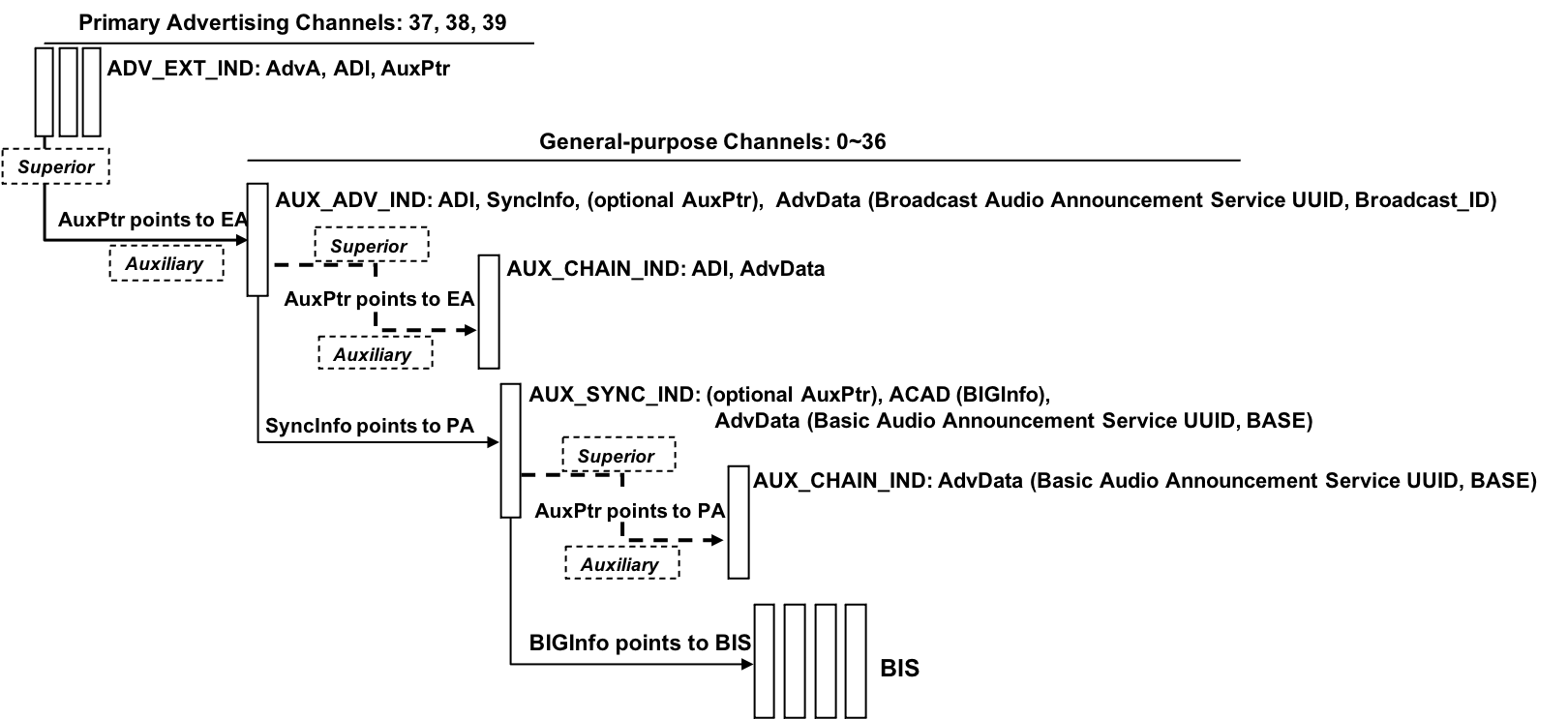

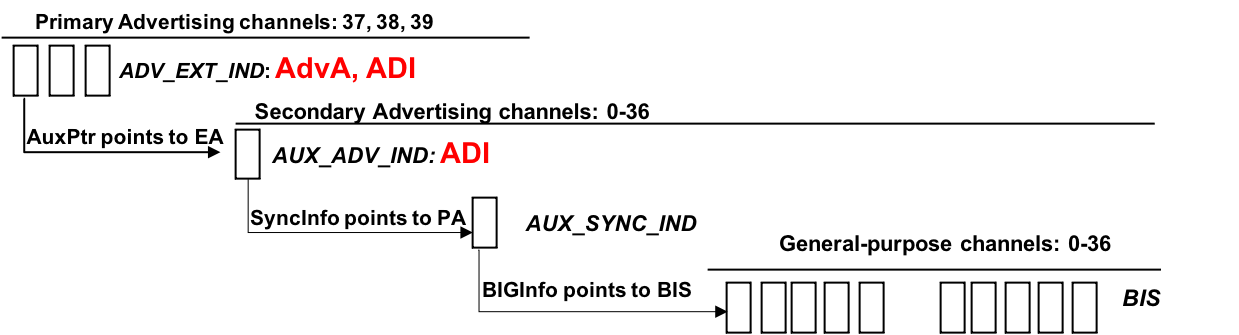

3.7.2. Audio announcements

There are two types of audio announcements that are used in this profile for broadcast audio: Broadcast Audio Announcements (defined in Section 3.7.2.1) and Basic Audio Announcements (defined in Section 3.7.2.2).

Broadcast Audio Announcements are used to inform scanning devices that a periodic advertising train (PA), transmitted by the device that transmits the Broadcast Audio Announcement, is associated with a BIG that transports one or more broadcast Audio Streams.

Basic Audio Announcements expose broadcast Audio Stream parameters.

3.7.2.1. Broadcast Audio Announcements

To associate a PA, used to expose broadcast Audio Stream parameters, with a broadcast Audio Stream, the Broadcast Source shall transmit EA PDUs that include the data defined in Table 3.14. Implementations or higher-layer specifications may define additional service data that follows the Broadcast_ID parameter to be included in the EA PDUs transmitted by the Broadcast Source.

The AD data format shown in Table 3.14 is defined in Volume 3, Part C, Section 11 in [1].

| Parameter | Size (Octets) | Description |
|---|---|---|
| Length | 1 | Length of Type and Value fields for AD data type |
| Type: «Service Data - 16-bit UUID» | 1 | Defined in Bluetooth Assigned Numbers [2] |
| Value | Varies | 2-octet Service UUID followed by the Broadcast_ID and any additional service data |
| Broadcast Audio Announcement Service UUID | 2 | Defined in Bluetooth Assigned Numbers [2] |
| Broadcast_ID | 3 | |

3.7.2.1.1. Broadcast_ID

For each BIG, the Broadcast Source shall generate a Broadcast_ID according to the requirements for random number generation as defined in Volume 3, Part H, Section 2 in [1]. The Broadcast_ID shall not change for the lifetime of the BIG.
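The following sketch generates a 3-octet Broadcast_ID and places it in the AD structure from Table 3.14. Python's `secrets` module is used as a stand-in random source; whether a given generator satisfies the requirements of Volume 3, Part H, Section 2 in [1] is for the implementer to confirm. The AD type value 0x16 and the service UUID 0x1852 are assumed here and should be checked against Bluetooth Assigned Numbers [2]. The function names are hypothetical.

```python
import secrets

AD_TYPE_SERVICE_DATA_16 = 0x16              # assumed; see Assigned Numbers [2]
BROADCAST_AUDIO_ANNOUNCEMENT_UUID = 0x1852  # assumed; see Assigned Numbers [2]


def new_broadcast_id() -> bytes:
    """Return 3 random octets; generate once per BIG and keep for its lifetime."""
    return secrets.token_bytes(3)


def broadcast_audio_announcement(broadcast_id: bytes,
                                 extra_service_data: bytes = b"") -> bytes:
    """Build the Service Data AD structure laid out in Table 3.14."""
    if len(broadcast_id) != 3:
        raise ValueError("Broadcast_ID must be exactly 3 octets")
    value = (BROADCAST_AUDIO_ANNOUNCEMENT_UUID.to_bytes(2, "little")
             + broadcast_id + extra_service_data)
    length = 1 + len(value)  # Length covers the Type and Value fields
    if length > 0xFF:
        raise ValueError("AD structure does not fit a 1-octet Length field")
    return bytes([length, AD_TYPE_SERVICE_DATA_16]) + value
```

Storing the result of `new_broadcast_id()` alongside the BIG state, rather than regenerating it on each advertising update, keeps the value constant for the lifetime of the BIG.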

3.7.2.2. Basic Audio Announcements

The Broadcast Source shall use the parameters defined in Table 3.15 when transmitting periodic advertising PDUs used to expose broadcast Audio Stream parameters.

The parameters in Table 3.15 that follow the Basic Audio Announcement Service UUID are defined as the Broadcast Audio Source Endpoint (BASE) structure.

The AD format shown in Table 3.15 is defined in Volume 3, Part C, Section 11 in [1].

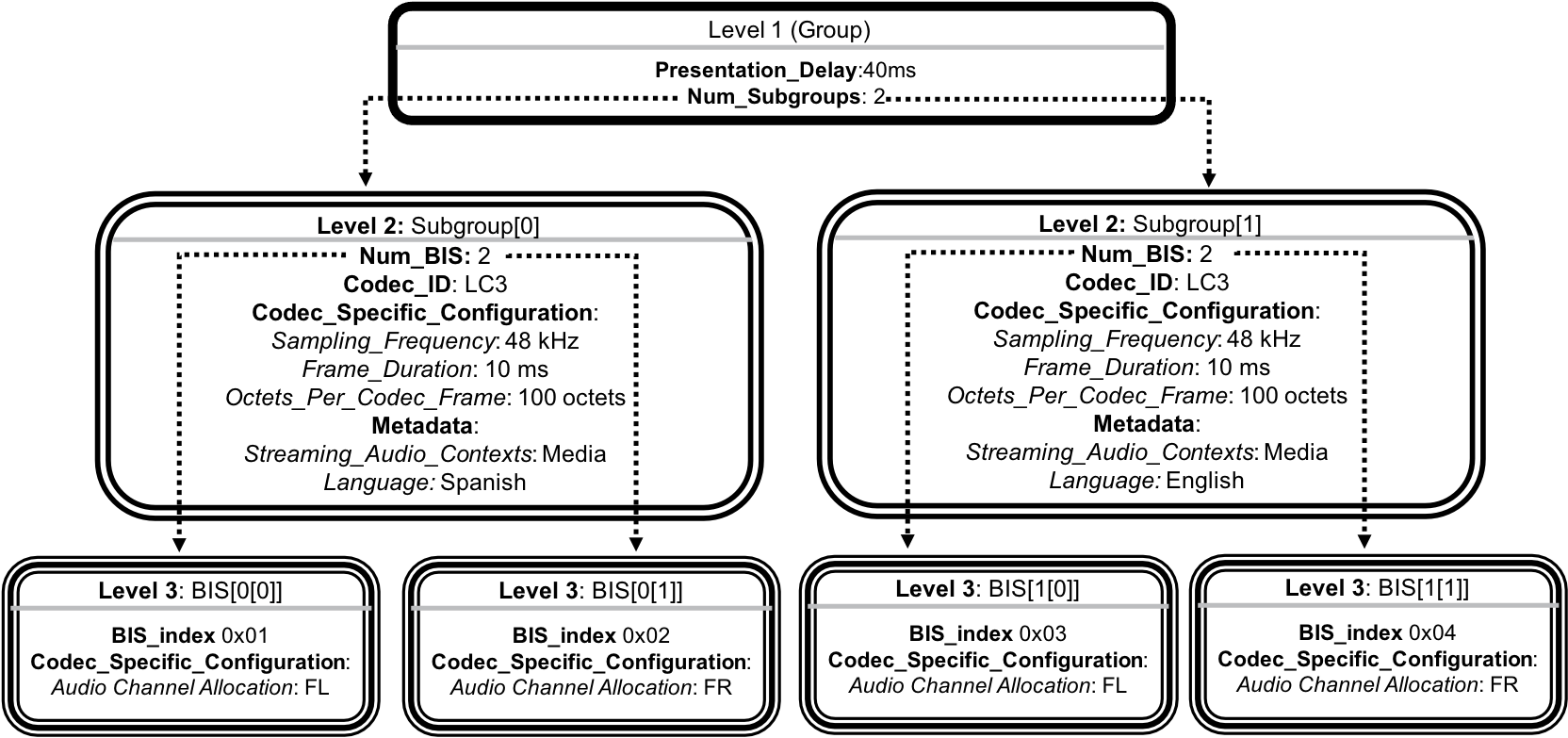

There are three numbered hierarchical levels to the BASE structure and the parameters contained within:

- Level 1: Group level. The BIG is the group.
- Level 2: Subgroup level. A subgroup is a collection of one or more BISes present in the BIG.
- Level 3: BIS level.

The following rules shall be met when populating the BASE:

- Rule 1: There shall be at least one subgroup.
- Rule 2: There shall be at least one BIS per subgroup.
- Rule 3: Every BIS in the BIG, denoted by its BIS_index value, shall only be present in one subgroup.
- Rule 4: Codec_Specific_Configuration parameters shall be present at Level 2 and may be present at Level 3. If an identical Codec_Specific_Configuration parameter value is present at Level 2 and at Level 3, the Codec_Specific_Configuration parameter value at Level 3 shall be treated as the only instance of that Codec_Specific_Configuration parameter value present. Where a Codec_Specific_Configuration parameter value includes length-type-value (LTV) structures, an LTV structure shall be considered an identical parameter to another LTV structure with the same Type field value, and the Value field of the LTV structure at Level 3 shall be treated as the only instance of that Value field that is present.
- Rule 5: Metadata_Length and Metadata parameter values may be changed while a broadcast Audio Stream is in the Streaming state (see Section 6.2.1). Changes to any other parameter values shall not occur while a broadcast Audio Stream is in the Streaming state.
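Rule 4 amounts to a per-Type override: when the same LTV Type appears at Level 2 and at Level 3, the Level 3 Value is the one that applies to that BIS. A minimal sketch of that merge follows, assuming each LTV structure is a 1-octet Length (counting the Type and Value octets), a 1-octet Type, and then the Value. The function names are illustrative, and treating a zero Length as malformed is a choice made for this sketch.

```python
def parse_ltv(data: bytes) -> dict[int, bytes]:
    """Split a run of LTV structures into {Type: Value}."""
    out: dict[int, bytes] = {}
    i = 0
    while i < len(data):
        length = data[i]
        if length == 0 or i + 1 + length > len(data):
            raise ValueError("malformed LTV structure")
        out[data[i + 1]] = data[i + 2:i + 1 + length]
        i += 1 + length
    return out


def effective_config(level2: bytes, level3: bytes) -> dict[int, bytes]:
    """Codec_Specific_Configuration that applies to one BIS under Rule 4."""
    merged = parse_ltv(level2)
    merged.update(parse_ltv(level3))  # Level 3 wins where the Type matches
    return merged
```

A receiver would call `effective_config` once per BIS, passing the subgroup's Level 2 configuration and that BIS's Level 3 configuration.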

| Level | Parameter | Size (Octets) | Description |
|---|---|---|---|
| | Length | 1 | Length of Type and Value fields for AD data type |
| | Type: «Service Data - 16-bit UUID» | 1 | Defined in Bluetooth Assigned Numbers [2] |
| | Value | Varies | 2-octet Service UUID followed by additional service data |
| 1 | Basic Audio Announcement Service UUID | 2 | Defined in Bluetooth Assigned Numbers [2] |
| 1 | Presentation_Delay | 3 | See Section 7 for description. Range: 0x000000 – 0xFFFFFF. Units: µs. All other values: RFU. |
| 1 | Num_Subgroups | 1 | Number of subgroups used to group BISes present in the BIG. Shall be at least 1, as defined by Rule 1. |
| 2 | Num_BIS[i] | 1 | Number of BISes in the [i]th subgroup. Shall be at least 1, as defined by Rule 2. |
| 2 | Codec_ID[i] | 5 | Codec information for the [i]th subgroup. Octet 0: Coding_Format (Coding_Format values are defined in Bluetooth Assigned Numbers [2]). Octet 1–2: Company ID (Company identifier values are defined in Bluetooth Assigned Numbers [2]); shall be 0x0000 if octet 0 ≠ 0xFF. Octet 3–4: Vendor-specific codec_ID; shall be 0x0000 if octet 0 ≠ 0xFF. |
| 2 | Codec_Specific_Configuration_Length[i] | 1 | Length of the Codec_Specific_Configuration for the [i]th subgroup |
| 2 | Codec_Specific_Configuration[i] | Varies | Codec-specific configuration parameters for the [i]th subgroup. Shall exist only if the Codec_Specific_Configuration_Length[i] ≠ 0x00. |
| 2 | Metadata_Length[i] | 1 | Length of the Metadata for the [i]th subgroup |
| 2 | Metadata[i] | Varies | Series of LTV structures containing Metadata for the [i]th subgroup. Shall exist only if the Metadata_Length[i] ≠ 0x00. |
| 3 | BIS_index[i[k]] | 1 | BIS_index value for the [k]th BIS in the [i]th subgroup |
| 3 | Codec_Specific_Configuration_Length[i[k]] | 1 | Length of the Codec_Specific_Configuration for the [k]th BIS in the [i]th subgroup |
| 3 | Codec_Specific_Configuration[i[k]] | Varies | Codec-specific configuration parameters for the [k]th BIS in the [i]th subgroup. Shall exist only if the Codec_Specific_Configuration_Length[i[k]] ≠ 0x00. |

A logical example of a BASE structure is shown in Figure 3.1. In the example in Figure 3.1, the Broadcast Source is a television that transmits a BIG with four BISes, with each BIS representing a different language and Audio Location. There are two subgroups, each with two BISes.

The boxes with a single-line border represent Level 1 parameters, the boxes with a double-line border represent Level 2 parameters, and the boxes with a triple-line border represent Level 3 parameters.

Subgroup[0] has two BISes: BIS_Index[0] (BIS_index 0x01) and BIS_Index[1] (BIS_index 0x02), representing Media, Spanish language, FL, and FR for Subgroup[0].

Subgroup[1] has two BISes: BIS_Index[0] (BIS_index 0x03) and BIS_Index[1] (BIS_index 0x04), representing Media, English language, FL, and FR for Subgroup[1].

The Codec_ID and the Codec_Specific_Configuration (Sampling_Frequency (Section 4.3.2), Frame_Duration (Section 4.3.2), Octets_Per_Codec_Frame (Section 4.3.2)) parameter values at Level 2 apply to the respective subgroups and their respective BISes for which they have been defined.

The Metadata (Streaming_Audio_Contexts [2], Language [2]) parameter values at Level 2 apply to the respective subgroups and their respective BISes for which they have been defined.

The Codec_Specific_Configuration (Audio_Channel_Allocation (Section 4.3.2)) parameter values at Level 3 apply to the specific BIS_index values for which they have been defined in addition to the Codec_Specific_Configuration parameter values defined at Level 2.

The table structure for the example BASE illustrated in Figure 3.1 is shown in Table 3.16.

| Level | Parameter | Size (Octets) | Value |
|---|---|---|---|
| | Length | 1 | Length of Type and Value fields for AD data type: 0x5D = 93 octets |
| | Type: «Service Data - 16-bit UUID» | 1 | Defined in Bluetooth Assigned Numbers [2] |
| | Value | 92 | 2-octet Service UUID followed by additional service data |
| | Basic Audio Announcement Service UUID | 2 | Defined in Bluetooth Assigned Numbers [2] |
| 1 | Presentation_Delay | 3 | 40 ms |
| 1 | Num_Subgroups | 1 | 0x02: 2 Subgroups |
| 2 | Num_BIS[0] | 1 | 0x02: 2 BIS in Subgroup[0] |
| 2 | Codec_ID[0] | 5 | Octet 0: 0x06 = LC3 Coding_Format; Octet 1–2: 0x0000; Octet 3–4: 0x0000 |
| 2 | Codec_Specific_Configuration_Length[0] | 1 | Length of the Codec_Specific_Configuration for Subgroup[0]: 0x0A octets |
| 2 | Codec_Specific_Configuration[0] | 10 | 3 LTV structures for Subgroup[0] defining: LTV 1: Sampling_Frequency: 48000 Hz; LTV 2: Frame_Duration: 10 ms; LTV 3: Octets_Per_Codec_Frame: 100 octets |
| 2 | Metadata_Length[0] | 1 | Length of Subgroup[0] Metadata: 0x09 octets |
| 2 | Metadata[0] | 9 | 2 LTV structures for Subgroup[0], defining: LTV 1: Streaming_Audio_Contexts: Media; LTV 2: Language: Spanish |
| 3 | BIS_index[0[0]] | 1 | 0x01 |
| 3 | Codec_Specific_Configuration_Length[0[0]] | 1 | Length of the Codec_Specific_Configuration for BIS_index 0x01: 0x06 octets |
| 3 | Codec_Specific_Configuration[0[0]] | 6 | 1 LTV structure for BIS_Index 0x01, defining: LTV 1 = Audio_Channel_Allocation: FL |
| 3 | BIS_index[0[1]] | 1 | 0x02 |
| 3 | Codec_Specific_Configuration_Length[0[1]] | 1 | Length of the Codec_Specific_Configuration for BIS_index 0x02: 0x06 octets |
| 3 | Codec_Specific_Configuration[0[1]] | 6 | 1 LTV structure for BIS_Index 0x02, defining: LTV 1 = Audio_Channel_Allocation: FR |
| 2 | Num_BIS[1] | 1 | 0x02: 2 BIS in Subgroup[1] |
| 2 | Codec_ID[1] | 5 | Octet 0: 0x06 = LC3 Coding_Format; Octet 1–2: 0x0000; Octet 3–4: 0x0000 |
| 2 | Codec_Specific_Configuration_Length[1] | 1 | Length of the Codec_Specific_Configuration for Subgroup[1]: 0x0A octets |
| 2 | Codec_Specific_Configuration[1] | 10 | 3 LTV structures for Subgroup[1] defining: LTV 1: Sampling_Frequency: 48000 Hz; LTV 2: Frame_Duration: 10 ms; LTV 3: Octets_Per_Codec_Frame: 100 octets |
| 2 | Metadata_Length[1] | 1 | Length of Subgroup[1] Metadata: 0x09 octets |
| 2 | Metadata[1] | 9 | 2 LTV structures for Subgroup[1] defining: LTV 1: Streaming_Audio_Contexts: Media; LTV 2: Language: English |
| 3 | BIS_index[1[0]] | 1 | 0x03 |
| 3 | Codec_Specific_Configuration_Length[1[0]] | 1 | Length of the Codec_Specific_Configuration for BIS_index 0x03: 0x06 octets |
| 3 | Codec_Specific_Configuration[1[0]] | 6 | 1 LTV structure for BIS_Index 0x03, defining: LTV 1 = Audio_Channel_Allocation: FL |
| 3 | BIS_index[1[1]] | 1 | 0x04 |
| 3 | Codec_Specific_Configuration_Length[1[1]] | 1 | Length of the Codec_Specific_Configuration for BIS_index 0x04: 6 octets |
| 3 | Codec_Specific_Configuration[1[1]] | 6 | 1 LTV structure for BIS_Index 0x04, defining: LTV 1 = Audio_Channel_Allocation: FR |
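The Length value of 0x5D in Table 3.16 can be cross-checked by summing the field sizes listed in the table. The following is a minimal sketch of that arithmetic only; the constant and function names are hypothetical, and the sizes are copied from the Size (Octets) column rather than derived from any encoding rules.

```python
# Field sizes in octets, copied from the Size (Octets) column of Table 3.16.
SERVICE_UUID = 2                      # Basic Audio Announcement Service UUID
LEVEL_1 = 3 + 1                       # Presentation_Delay + Num_Subgroups
LEVEL_2 = 1 + 5 + 1 + 10 + 1 + 9      # Num_BIS, Codec_ID, config length, config, metadata length, metadata
LEVEL_3 = 1 + 1 + 6                   # BIS_index, config length, config

NUM_SUBGROUPS = 2
BIS_PER_SUBGROUP = 2


def base_ad_lengths() -> tuple[int, int]:
    """Return (size of the Value field, value of the Length field)."""
    per_subgroup = LEVEL_2 + BIS_PER_SUBGROUP * LEVEL_3
    value_size = SERVICE_UUID + LEVEL_1 + NUM_SUBGROUPS * per_subgroup
    length_field = 1 + value_size     # Length covers the Type octet plus the Value field
    return value_size, length_field


value_size, length_field = base_ad_lengths()
print(value_size, hex(length_field))
```

Each subgroup contributes 43 octets (27 at Level 2 plus two 8-octet Level 3 entries), giving a 92-octet Value field and a Length of 93.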

3.8. Broadcast Sink support requirements

This section defines support requirements for the Broadcast Sink role.

The Broadcast Sink shall instantiate one Published Audio Capabilities Service.

3.8.1. ATT and EATT transport requirements

The Broadcast Sink shall support a minimum ATT_MTU of 64 octets for one Unenhanced ATT bearer or for at least one Enhanced ATT bearer if the Broadcast Sink supports Enhanced ATT bearers.

3.8.2. Additional Published Audio Capabilities service requirements

This section defines additional requirements for the Broadcast Sink beyond those defined in PACS [5].

The Broadcast Sink shall support reception and decoding of audio data that is encoded using the settings defined as Mandatory in Table 3.17. The Broadcast Sink may support reception and decoding of audio data that is encoded using settings defined as Optional in Table 3.17 or any other settings defined by an implementation or by a higher-layer specification.

The Broadcast Sink shall expose all supported audio capability settings in one or more Sink PAC characteristics containing one or more PAC records.

| Codec Capability Setting | Codec_ID | Supported_Sampling_Frequencies (kHz) (Section 4.3.1) | Supported_Frame_Durations (ms) (Section 4.3.1) | Supported_Octets_Per_Codec_Frame (Octets) (Section 4.3.1) | Requirement |
|---|---|---|---|---|---|
| 8_1 | LC3 | 8 | 7.5 | 26¹ (27.734 kbps²) | O |
| 8_2 | LC3 | 8 | 10 | 30¹ (24 kbps²) | O |
| 16_1 | LC3 | 16 | 7.5 | 30¹ (32 kbps²) | O |
| 16_2 | LC3 | 16 | 10 | 40¹ (32 kbps²) | M |
| 24_1 | LC3 | 24 | 7.5 | 45¹ (48 kbps²) | O |
| 24_2 | LC3 | 24 | 10 | 60¹ (48 kbps²) | M |
| 32_1 | LC3 | 32 | 7.5 | 60¹ (64 kbps²) | O |
| 32_2 | LC3 | 32 | 10 | 80¹ (64 kbps²) | O |
| 441_1 | LC3 | 44.1 | 8.163³ | 97¹ (95.06 kbps²) | O |
| 441_2 | LC3 | 44.1 | 10.884³ | 130¹ (95.55 kbps²) | O |
| 48_1 | LC3 | 48 | 7.5 | 75¹ (80 kbps²) | O |
| 48_2 | LC3 | 48 | 10 | 100¹ (80 kbps²) | O |
| 48_3 | LC3 | 48 | 7.5 | 90¹ (96 kbps²) | O |
| 48_4 | LC3 | 48 | 10 | 120¹ (96 kbps²) | O |
| 48_5 | LC3 | 48 | 7.5 | 117¹ (124.8 kbps²) | O |
| 48_6 | LC3 | 48 | 10 | 155¹ (124 kbps²) | O |

The Supported_Sampling_Frequencies, Supported_Frame_Durations, and Supported_Octets_Per_Codec_Frame columns are Codec_Specific_Capabilities (Defined in PACS [5]).

¹ The supported range shall include this value.

² Bit rates are calculated according to Section 3.2.5 in [7].

³ Effective frame durations. The 44.1 kHz sampling rate results in a deviation from the 7.5 ms/10 ms frame durations that can be exposed using the Supported_Frame_Durations LTV structure. For 44.1 kHz/7.5 ms the actual frame duration is equivalent to 360 (samples per frame) divided by 44100 (samples per second), which equals 8.16327 ms per frame, and for 44.1 kHz/10 ms the actual frame duration is equal to 480 (samples per frame) divided by 44100 (samples per second), which equals 10.88435 ms per frame. The LC3 [7] codec encodes 97 octets (for 7.5 ms/8.163 ms effective) or 130 octets (for 10 ms/10.884 ms effective) into each SDU, which arrives at the controller every 8.16327 ms or 10.88435 ms. The transmitting device assigns a time offset to each SDU and delivers the time_offset with each SDU at the receiver, as defined in Volume 6, Part G, Section 3.1 in [1]. Determination of the time_offset parameter at the transmitting device is implementation-specific. Compensation for the difference between 8.16327 ms and 8.163 ms, and/or compensation between 10.88435 ms and 10.884 ms, is implementation-specific.
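The effective frame durations and bit rates quoted for the 44.1 kHz settings can be reproduced with a few lines of arithmetic. The sketch below assumes the bit rate is simply octets per frame times 8 divided by the frame duration; that formula is an assumption chosen because it matches the figures checked here, not a reproduction of Section 3.2.5 in [7]. The function names are hypothetical.

```python
def effective_frame_duration_ms(samples_per_frame: int, sample_rate_hz: int) -> float:
    """Frame duration in milliseconds for a given frame size and sampling rate."""
    return samples_per_frame / sample_rate_hz * 1000.0


def bit_rate_kbps(octets_per_frame: int, frame_duration_ms: float) -> float:
    """Bits per millisecond, which is numerically equal to kbps."""
    return octets_per_frame * 8 / frame_duration_ms


# 44.1 kHz settings: 360 or 480 samples per frame, as described in footnote 3.
d_441_1 = effective_frame_duration_ms(360, 44100)
d_441_2 = effective_frame_duration_ms(480, 44100)

print(round(d_441_1, 5), round(bit_rate_kbps(97, d_441_1), 2))
print(round(d_441_2, 5), round(bit_rate_kbps(130, d_441_2), 2))
print(bit_rate_kbps(40, 10))   # 16_2 setting: 40 octets every 10 ms
```

With these inputs the sketch yields 8.16327 ms and 95.06 kbps for 441_1, 10.88435 ms and 95.55 kbps for 441_2, and 32 kbps for 16_2.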

If the Broadcast Sink supports vendor-specific codec audio capabilities, the Broadcast Sink shall use the format defined in Table 3.18 when populating the Codec_ID field in PAC records that expose vendor-specific audio capabilities.

| Parameter | Value |
|---|---|
| Codec_ID | Octet 0: 0xFF = Vendor-specific Coding_Format; Octet 1–2: Company ID (Company ID values are defined in Bluetooth Assigned Numbers [2]); Octet 3–4: Vendor-specific codec_ID |
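A minimal sketch of packing the 5-octet Codec_ID field follows. It assumes little-endian ordering for the two 2-octet subfields, as is usual for Bluetooth multi-octet fields; the `pack_codec_id` helper is hypothetical, and the Company ID and vendor codec ID shown are placeholders rather than assigned values.

```python
import struct


def pack_codec_id(coding_format: int, company_id: int, vendor_codec_id: int) -> bytes:
    """Pack Codec_ID as 1 octet + 2 octets + 2 octets (little-endian assumed)."""
    return struct.pack("<BHH", coding_format, company_id, vendor_codec_id)


# LC3, as used in Table 3.16: Coding_Format 0x06, remaining octets zero.
lc3_codec_id = pack_codec_id(0x06, 0x0000, 0x0000)

# Vendor-specific format: Coding_Format 0xFF with placeholder IDs.
vendor_codec_id = pack_codec_id(0xFF, 0x1234, 0xABCD)

print(lc3_codec_id.hex(), vendor_codec_id.hex())
```

Both results are 5 octets long, matching the Codec_ID size given in Table 3.16.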

3.8.2.1. Audio data Context Type support requirements

The Broadcast Sink shall support the Context Type value defined as ‘unspecified’ in the Supported_Sink_Contexts field of the Supported Audio Contexts characteristic.
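The requirement above can be checked against a raw Supported Audio Contexts characteristic value, as in the following sketch. It rests on three assumptions that are not stated in this section: that the characteristic value is Supported_Sink_Contexts followed by Supported_Source_Contexts, each a 2-octet little-endian bitfield, and that the ‘unspecified’ Context Type is bit value 0x0001 as listed in Bluetooth Assigned Numbers [2]. The helper name is hypothetical.

```python
import struct

CONTEXT_UNSPECIFIED = 0x0001  # assumed bit value from Bluetooth Assigned Numbers


def sink_supports_unspecified(characteristic_value: bytes) -> bool:
    """Return True if the 'unspecified' bit is set in Supported_Sink_Contexts."""
    # Assumed layout: sink contexts (2 octets), then source contexts (2 octets).
    sink_contexts, _source_contexts = struct.unpack("<HH", characteristic_value)
    return bool(sink_contexts & CONTEXT_UNSPECIFIED)


print(sink_supports_unspecified(bytes([0x05, 0x00, 0x00, 0x00])))
print(sink_supports_unspecified(bytes([0x04, 0x00, 0x00, 0x00])))
```

The first value has the assumed ‘unspecified’ bit set in the sink field and passes the check; the second does not.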

3.9. Scan Delegator support requirements

The Scan Delegator shall instantiate one Broadcast Audio Scan Service.

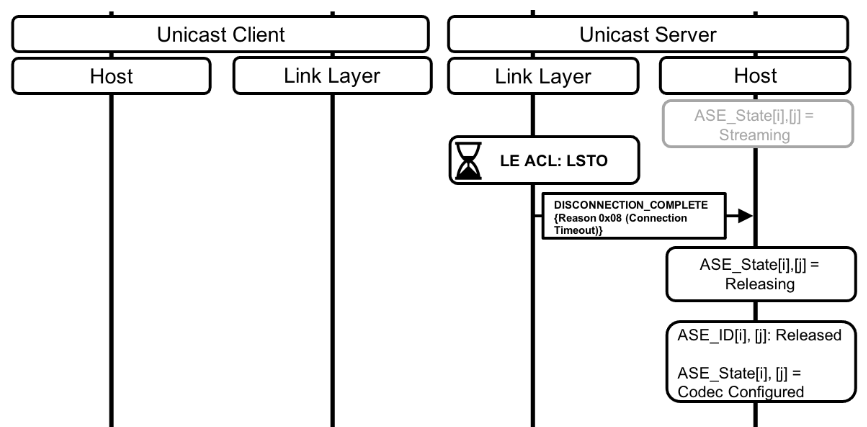

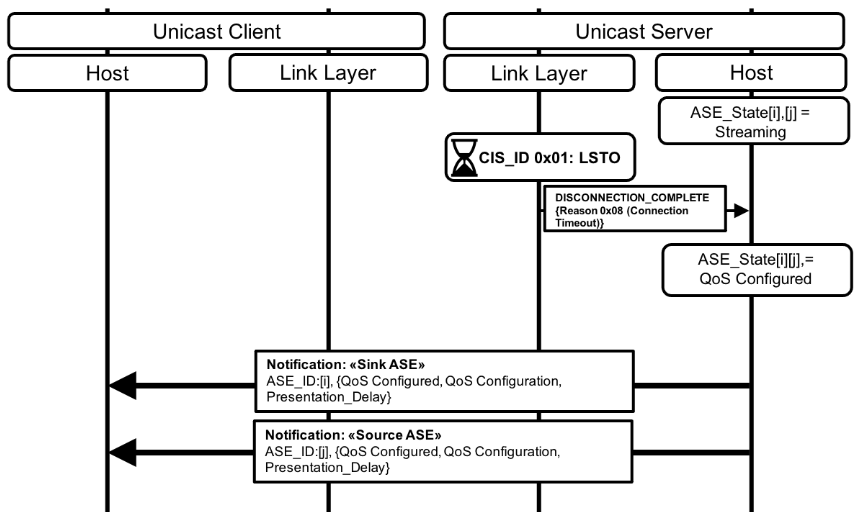

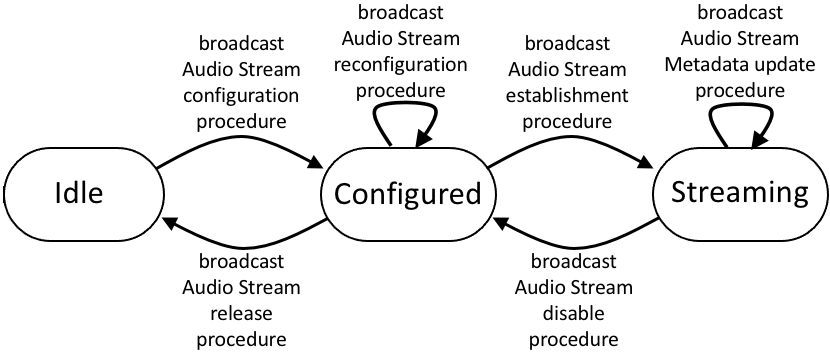

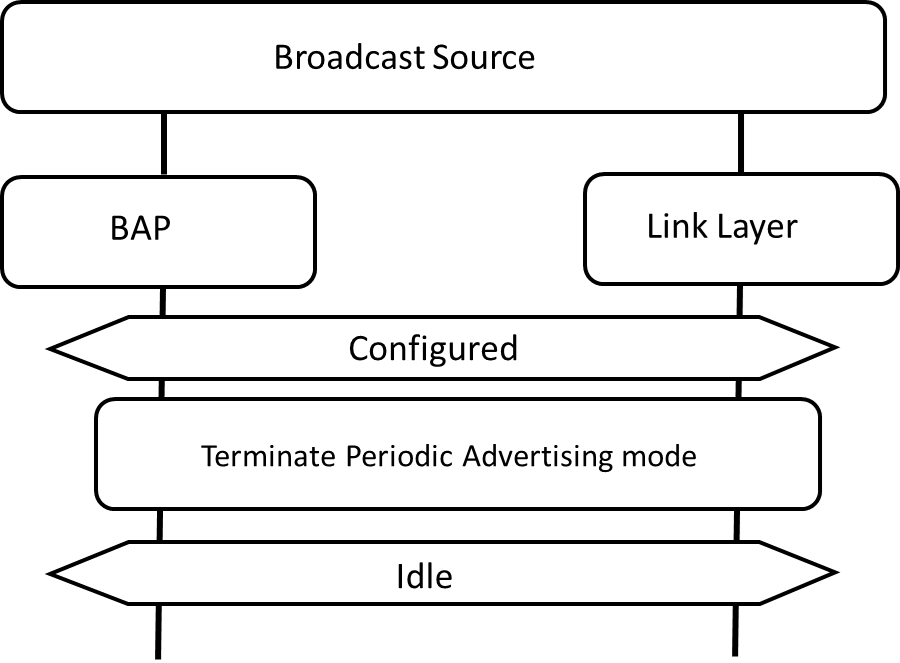

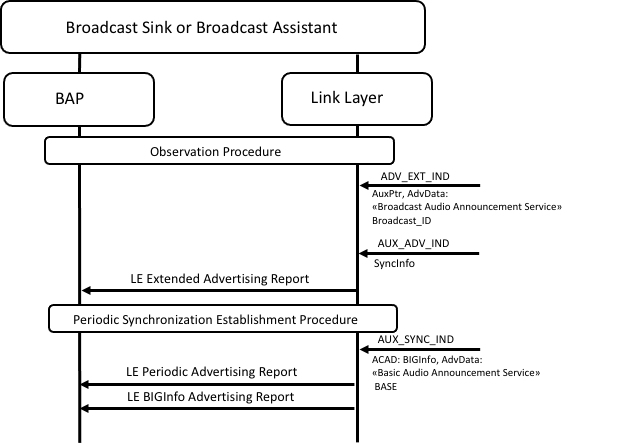

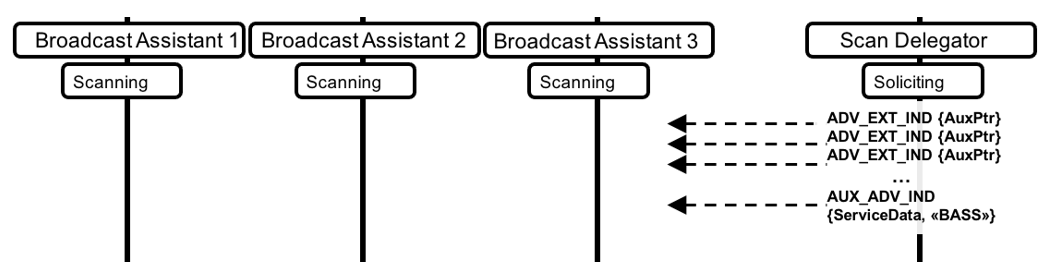

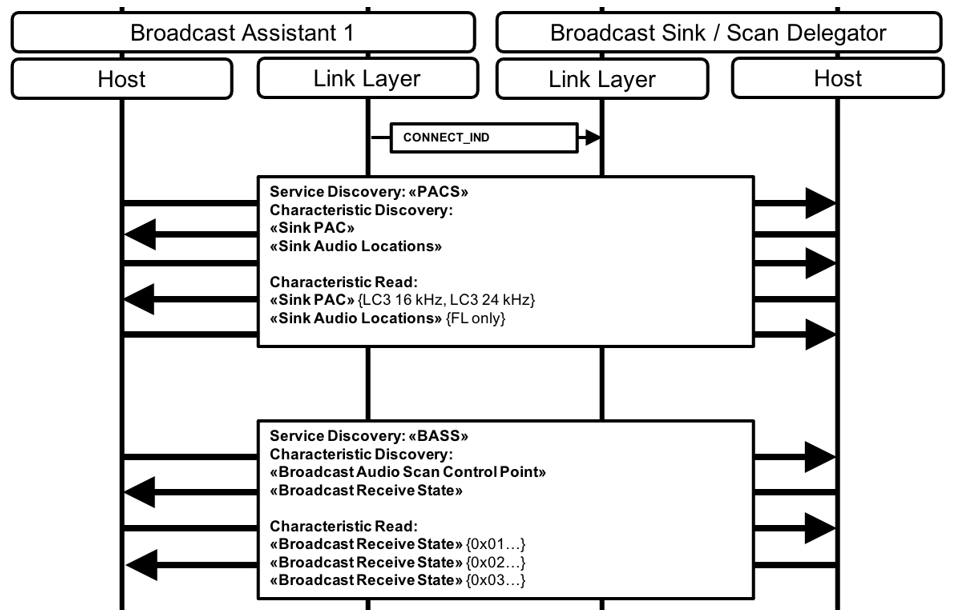

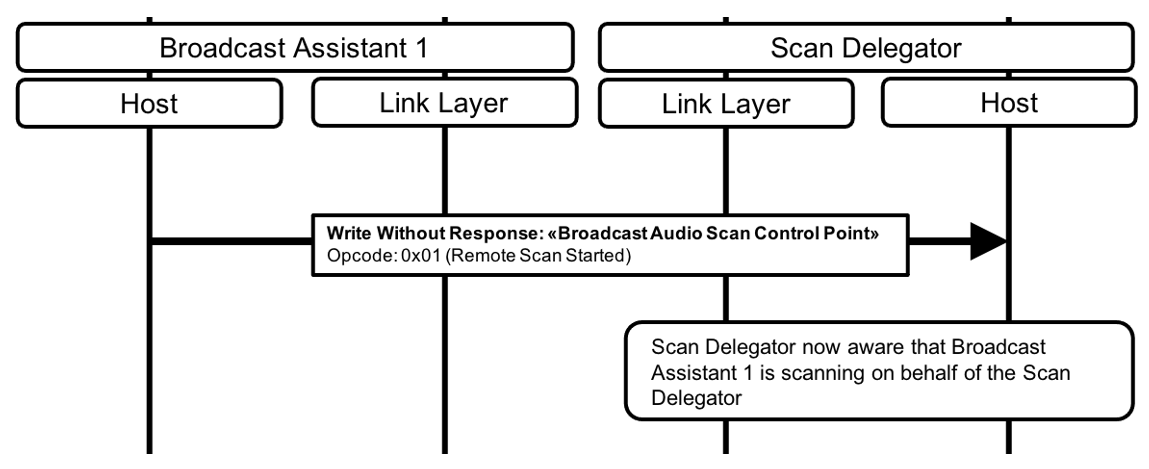

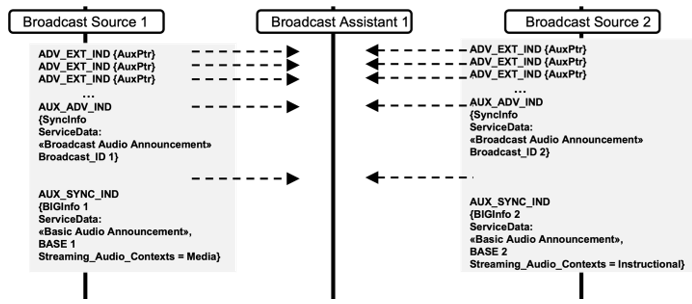

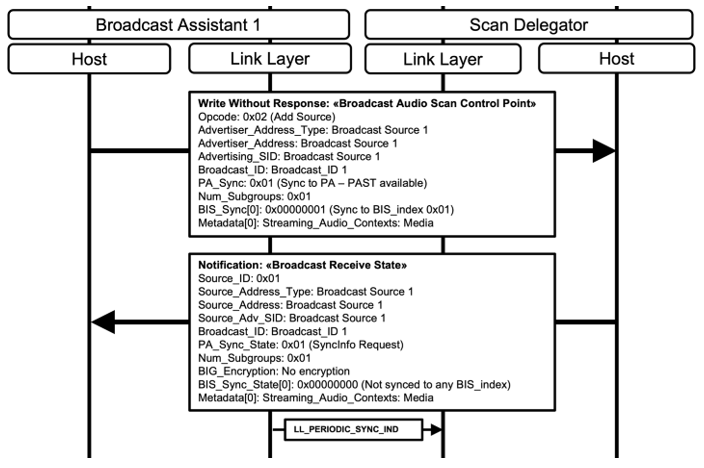

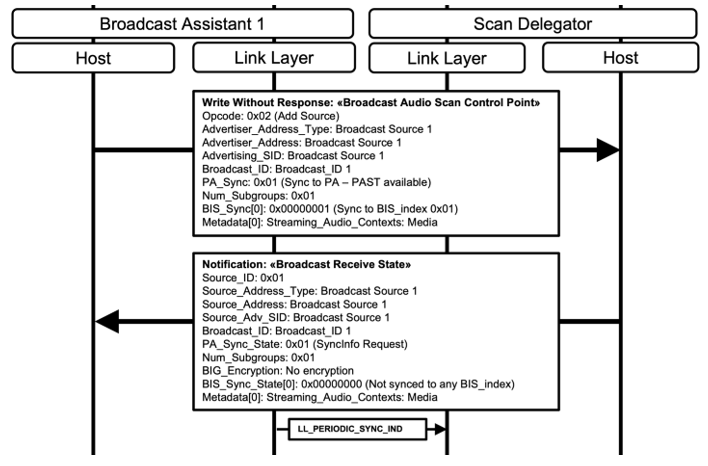

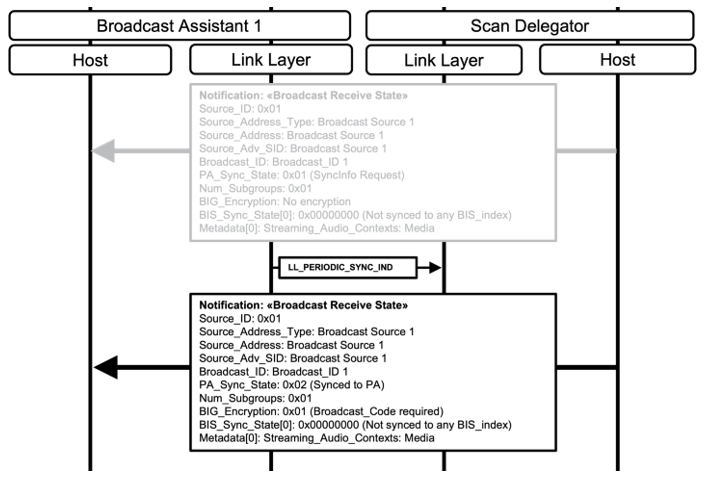

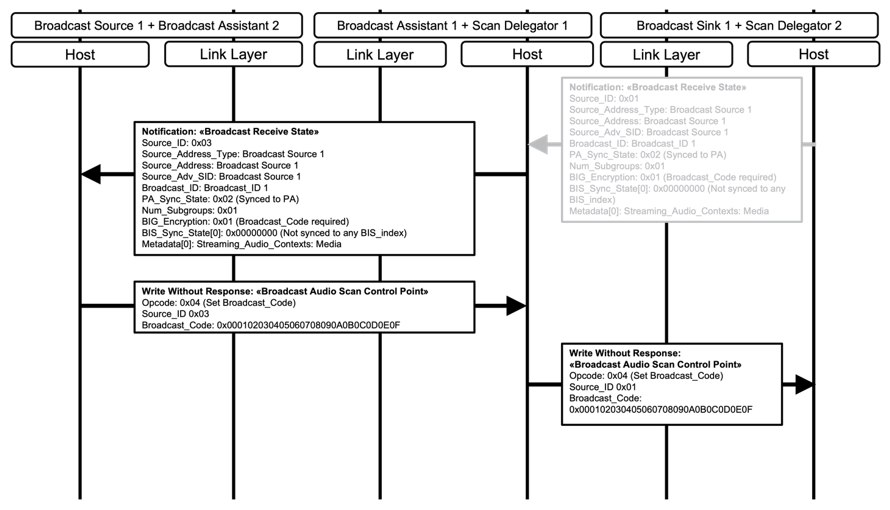

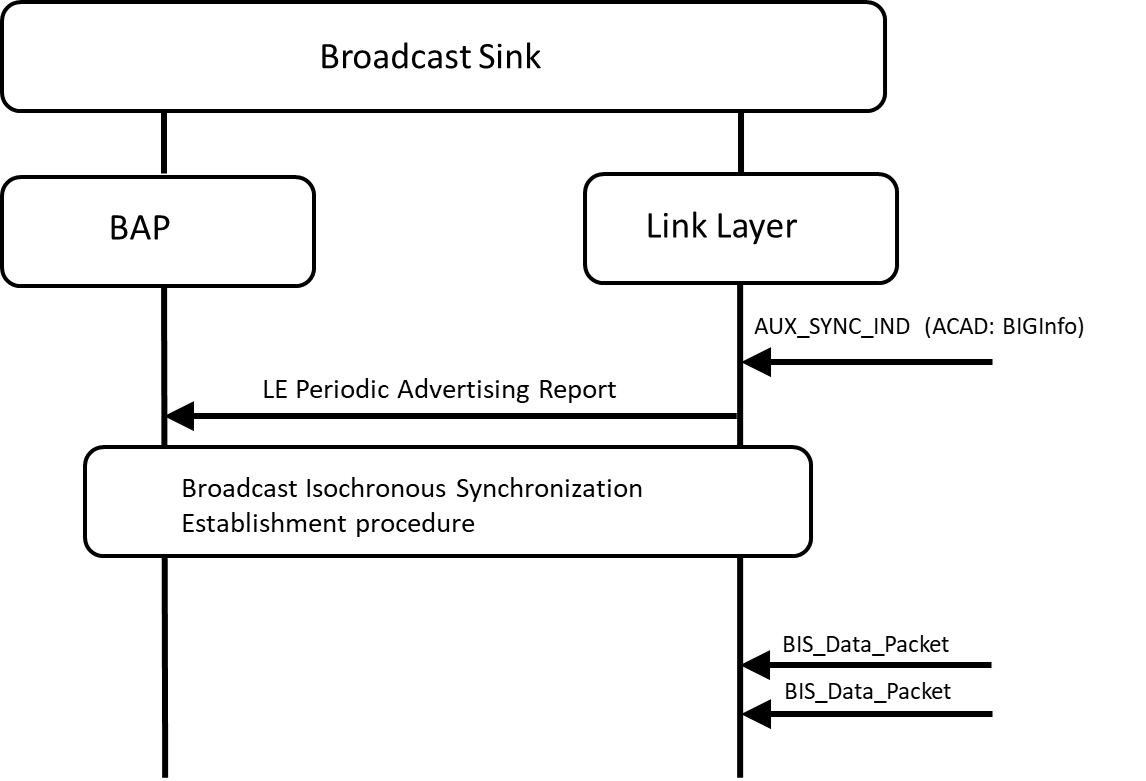

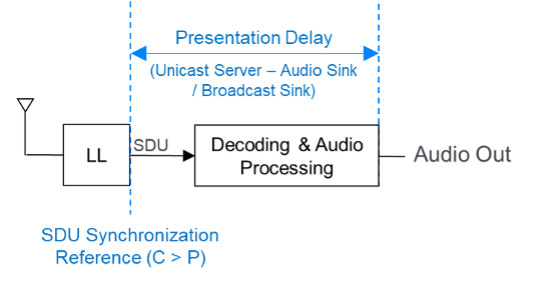

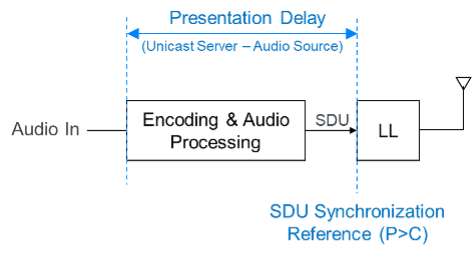

3.9.1. ATT and EATT transport requirements